Political polls are widely conducted as a tool to gauge public opinion on various issues, candidates, and policies, playing a crucial role in shaping political strategies and informing voters. These surveys are typically carried out by media organizations, research institutions, and political campaigns to measure sentiment, predict election outcomes, and identify trends within the electorate. While polls provide valuable insights, their accuracy and reliability depend on factors such as sample size, methodology, and timing, making them both influential and sometimes controversial in the political landscape.

| Characteristics | Values |
|---|---|
| Frequency | Regularly, especially during election seasons. In the U.S., polls are often conducted daily or weekly by major organizations like Gallup and Pew Research Center, and tracked by aggregators like FiveThirtyEight. |
| Methods | Telephone interviews (landline and mobile), online panels, in-person interviews, and mail surveys. Increasingly, online and mobile methods are dominant. |
| Sample Size | Varies widely, typically ranging from 1,000 to 2,000 respondents per poll, depending on the organization and scope. |
| Margin of Error | Usually between ±2 and ±5 percentage points, depending mainly on sample size and weighting. |
| Timing | Conducted year-round but more frequently during election years, primaries, and key political events. |
| Sponsors | Media outlets (e.g., CNN, Fox News), research organizations (e.g., Pew, Quinnipiac), political campaigns, and universities. |
| Purpose | Measure public opinion on candidates, policies, and issues; predict election outcomes; and track trends over time. |
| Demographics | Often stratified or weighted by age, gender, race, education, income, and geographic location to ensure representativeness. |
| Transparency | Reputable polls disclose methodology, sample size, margin of error, and funding sources. |
| Accuracy | Varies; accuracy depends on sampling methods, response rates, and weighting techniques. Recent challenges include declining response rates and partisan non-response. |
| Regulation | No formal regulation, but organizations like the American Association for Public Opinion Research (AAPOR) set ethical standards. |
| Recent Trends | Increased use of online polling, focus on likely voters, and integration of big data and AI for predictive modeling. |


What You'll Learn

- Sampling Methods: Random vs. targeted sampling techniques used in political polling for accuracy

- Question Wording: Impact of phrasing on poll results and respondent bias

- Timing of Polls: How election proximity affects poll outcomes and public perception

- Margin of Error: Statistical reliability and confidence levels in poll predictions

- Online vs. Phone Polls: Differences in response rates and demographic representation between methods

Sampling Methods: Random vs. targeted sampling techniques used in political polling for accuracy

Political polls are only as reliable as the samples they draw from. The choice between random and targeted sampling techniques is a critical decision that directly impacts the accuracy of polling results. Random sampling, the gold standard in statistical research, involves selecting individuals from a population without bias, ensuring every person has an equal chance of being chosen. This method is prized for its ability to produce representative samples, which are essential for generalizing findings to the broader population. For instance, a random sample of 1,000 voters from a diverse electorate can provide a reliable snapshot of public opinion, assuming proper weighting for demographics like age, gender, and region.
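As a rough illustration of simple random sampling, the Python sketch below draws 1,000 respondents from a made-up sampling frame in which every voter has the same chance of selection. The frame size, the 52% true share, and the fixed seed are assumptions chosen for the example, not figures from any real poll.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Hypothetical sampling frame: 100,000 voters, 52% of whom hold opinion "A".
frame = ["A"] * 52_000 + ["B"] * 48_000

# Simple random sample without replacement: every voter is equally likely to be drawn.
sample = rng.sample(frame, k=1000)

share_a = sample.count("A") / len(sample)
print(f"sample share for A: {share_a:.3f} (true share 0.520)")
```

The sample share will usually land within a few points of the true share, which is the sense in which a random sample of 1,000 gives a reliable snapshot.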

In contrast, targeted sampling deliberately selects specific subgroups within a population based on predetermined criteria. This approach is often used when researchers need to focus on particular demographics, such as undecided voters, young adults, or residents of swing states. While targeted sampling can yield deeper insights into these groups, it risks introducing bias if the sample is not carefully balanced. For example, a poll targeting only college-educated voters might overrepresent liberal-leaning opinions, skewing the overall results.

The trade-off between these methods lies in their precision versus generalizability. Random sampling excels at capturing the diversity of a population but may dilute the voices of smaller, yet significant, subgroups. Targeted sampling, on the other hand, amplifies these voices but limits the ability to make broad conclusions. Pollsters often employ a hybrid approach, using random sampling as a foundation and supplementing it with targeted oversamples to ensure adequate representation of key groups.
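The hybrid approach can be sketched in a few lines of Python. Everything in the example is illustrative: the population shares, sample counts, and support figures are invented, and the function names are hypothetical. It shows why an oversampled subgroup has to be weighted back to its population share before a topline number is reported.

```python
# Hypothetical figures for illustration; not taken from any real poll.
population_share = {"under_30": 0.20, "30_plus": 0.80}

# The sample deliberately oversamples the under-30 group (400 of 1,000).
sample_counts = {"under_30": 400, "30_plus": 600}

# Share of each group supporting Candidate A in the sample (assumed values).
support_in_group = {"under_30": 0.60, "30_plus": 0.45}


def unweighted_support(counts, support):
    """Naive topline that ignores the oversample."""
    total = sum(counts.values())
    return sum(n / total * support[group] for group, n in counts.items())


def weighted_support(pop_share, counts, support):
    """Weight each group back to its population share, then compute the topline."""
    total = sum(counts.values())
    estimate = 0.0
    for group, n in counts.items():
        sample_share = n / total
        weight = pop_share[group] / sample_share  # below 1 for oversampled groups
        estimate += weight * sample_share * support[group]
    return estimate


naive = unweighted_support(sample_counts, support_in_group)
weighted = weighted_support(population_share, sample_counts, support_in_group)
print(f"unweighted: {naive:.2f}, weighted: {weighted:.2f}")
```

With these assumed numbers the unweighted figure is 0.51 and the weighted figure is 0.48, so ignoring the oversample would overstate the candidate's support by three points.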

Practical considerations also influence the choice of sampling method. With pure random sampling, small subgroups appear only in proportion to their share of the population, so obtaining precise estimates for them requires a large and costly overall sample. Targeted sampling, while more efficient for specific inquiries, demands meticulous planning to avoid bias. For instance, a poll focusing on Hispanic voters might use stratified sampling, dividing the population into subgroups based on language preference or country of origin and sampling from each to ensure a balanced sample.

Ultimately, the key to accurate political polling lies in understanding the strengths and limitations of each sampling technique. Random sampling provides a robust framework for generalizable results, while targeted sampling offers nuanced insights into specific populations. By combining these methods thoughtfully, pollsters can navigate the complexities of public opinion and deliver data that truly reflects the electorate.


Question Wording: Impact of phrasing on poll results and respondent bias

The way a question is phrased in a political poll can significantly alter the results, often leading to respondent bias. For instance, asking, "Do you support the government's handling of the economy?" may yield different responses compared to, "Do you think the government has failed to manage the economy effectively?" The former invites a neutral or positive response, while the latter primes respondents to consider negative outcomes. This subtle shift in wording can skew results, highlighting the importance of careful question design in polling.

Consider the impact of leading questions, which can unintentionally guide respondents toward a particular answer. For example, a poll asking, "How concerned are you about the rising national debt under the current administration?" implicitly assigns blame to the administration, potentially inflating negative responses. To mitigate this, pollsters should use balanced language, such as, "What is your level of concern about the national debt?" This neutral phrasing allows respondents to form their own opinions without external influence.

A comparative analysis of question types reveals that open-ended questions often yield more nuanced responses but are harder to quantify. For instance, asking, "What issues are most important to you in this election?" provides rich qualitative data but requires extensive coding and analysis. In contrast, closed-ended questions like, "Which of the following issues is most important: healthcare, education, or the economy?" are easier to measure but may limit respondents’ ability to express their true priorities. Pollsters must weigh these trade-offs when designing surveys.

Practical tips for minimizing bias include pre-testing questions with a small, diverse sample to identify ambiguous or leading phrasing. For example, a question like, "Should taxes be increased to fund public services?" could be revised to, "What is your opinion on using tax increases to fund public services?" to reduce loaded language. Additionally, using clear, concise language and avoiding jargon ensures that respondents of all age groups and educational levels can understand the question. For instance, replacing "fiscal policy" with "government spending plans" can improve comprehension among younger or less politically engaged respondents.

Ultimately, the impact of question wording on poll results cannot be overstated. A well-designed question should be neutral, clear, and free of leading language to ensure accurate responses. Pollsters must remain vigilant in their question design, recognizing that even minor changes in phrasing can produce major shifts in public opinion data. By prioritizing transparency and precision, polls can better reflect the true sentiments of the population, enhancing their credibility and utility in political discourse.


Timing of Polls: How election proximity affects poll outcomes and public perception

The timing of political polls is a critical factor that can significantly influence their outcomes and how the public perceives them. As elections draw nearer, polls often become more volatile, reflecting the heightened engagement and shifting sentiments of voters. For instance, a poll conducted six months before an election might show a stable lead for one candidate, but as the election approaches, margins can narrow or even flip due to factors like media coverage, debates, and last-minute campaign strategies. This phenomenon underscores the importance of interpreting poll results within the context of their timing.

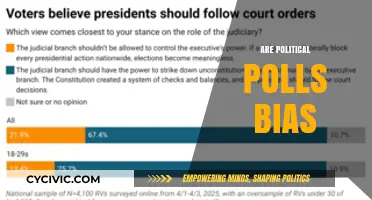

Several practical steps help in judging how election proximity affects polls. First, track polling trends over time rather than focusing on a single snapshot. Aggregators such as FiveThirtyEight or RealClearPolitics compile polls and provide historical data, allowing you to observe how numbers evolve as elections near. Second, pay attention to the margin of error, which typically ranges from ±3 to ±5 percentage points for reputable polls. A shift smaller than this range may be nothing more than sampling noise, so look for movement that persists across several polls before reading it as a real change in voter sentiment. Finally, compare polls conducted by different organizations to identify patterns or outliers, as methodologies and sample sizes can vary.
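Tracking a trend rather than a single snapshot can be approximated with a simple rolling average. The sketch below is a deliberately minimal illustration: the poll dates and leads are hypothetical, the `rolling_average` helper is invented for this example, and real aggregators apply more elaborate adjustments than an unweighted mean.

```python
from datetime import date

# Hypothetical polls: (end date, Candidate A lead in percentage points).
polls = [
    (date(2024, 9, 1), 4.0),
    (date(2024, 9, 8), 6.0),
    (date(2024, 9, 15), 2.0),
    (date(2024, 9, 22), 3.0),
    (date(2024, 9, 29), 1.0),
]


def rolling_average(observations, window=3):
    """Average each poll with up to window-1 polls before it, in date order."""
    ordered = sorted(observations, key=lambda item: item[0])
    averaged = []
    for i, (day, _) in enumerate(ordered):
        recent = [lead for _, lead in ordered[max(0, i - window + 1): i + 1]]
        averaged.append((day, sum(recent) / len(recent)))
    return averaged


trend = rolling_average(polls)
for day, avg in trend:
    print(day.isoformat(), round(avg, 2))
```

In this made-up series the individual polls bounce between 1 and 6 points, while the three-poll average moves more smoothly from 4.0 down to 2.0, which is easier to read as a trend.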

Polls conducted within the final two weeks of a campaign tend to align more closely with actual results than those taken earlier, because undecided voters make up their minds and campaigns intensify their turnout efforts. Closer does not mean exact, however. In the 2020 U.S. presidential election, final national polls still overstated Joe Biden's eventual margin of victory by several points, and in the 2016 U.K. Brexit referendum, many late polls underestimated the "Leave" vote. Both cases show that late polls narrow the uncertainty without eliminating it.

Campaigns and media outlets should exercise caution when citing polls as election day nears. Overemphasizing late-stage polls can create a bandwagon effect, in which voters gravitate toward the apparent front-runner, or breed complacency among supporters who assume victory is inevitable, potentially suppressing turnout. Conversely, underdog candidates may use close poll numbers to rally supporters. For the public, the takeaway is clear: treat late polls as a guide, not a guarantee. Stay informed, critically evaluate poll methodologies, and remember that the only poll that truly matters is the one on election day.


Margin of Error: Statistical reliability and confidence levels in poll predictions

Political polls are ubiquitous during election seasons, but their accuracy hinges on a critical concept: the margin of error. This statistical measure quantifies the uncertainty in poll results, reflecting the range within which the true population value likely falls. For instance, a poll showing Candidate A leading with 48% support and a margin of error of ±3% means the actual support could be as low as 45% or as high as 51%. Understanding this range is essential for interpreting poll results without overconfidence.

To grasp the margin of error, consider its relationship to sample size and confidence level. A larger sample reduces the margin of error because it gives a more precise estimate of the population, though with diminishing returns: the margin shrinks with the square root of the sample size. For example, a poll of 1,000 respondents has a margin of error of about ±3.1 percentage points at the 95% confidence level, while a poll of 500 respondents has about ±4.4. The confidence level, usually set at 95%, describes how often the method works: if the same poll were repeated 100 times, about 95 of the resulting intervals would be expected to contain the true value.
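The figures for 1,000 and 500 respondents follow from the standard formula for a proportion under simple random sampling, z × sqrt(p(1 − p) / n). The short Python sketch below reproduces them; the function name is hypothetical, and it assumes the worst case p = 0.5 and z = 1.96 for 95% confidence. Real polls that apply weighting typically report somewhat larger margins.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Margin of error for a proportion from a simple random sample of size n.

    p=0.5 is the worst case (largest margin); z=1.96 corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


for n in (500, 1000, 2000):
    print(n, f"±{margin_of_error(n) * 100:.1f} points")
```

This prints roughly ±4.4, ±3.1, and ±2.2 points. Quadrupling the sample from 500 to 2,000 only halves the margin, which is why few polls go far beyond a couple of thousand respondents.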

Practical interpretation of margins of error requires caution. When the gap between two candidates is smaller than the poll's uncertainty, the lead is not statistically significant. For example, if Candidate A has 48% support (±3%) and Candidate B has 46% support (±3%), the two ranges overlap (45% to 51% versus 43% to 49%), so either candidate could be ahead. Because both figures come from the same sample, the margin of error on the gap itself is larger than the margin on either candidate's share, roughly double in a close two-way race. Journalists and analysts must communicate this nuance to avoid misleading the public.
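One way to check whether a lead is meaningful is to compute the margin of error on the gap between the two candidates rather than on each share separately. The sketch below uses the standard variance of a difference between two proportions from the same sample; the function name is hypothetical, and the sample size of 1,000 is an assumption, since the 48% versus 46% example does not state one.

```python
import math


def lead_margin_of_error(p1, p2, n, z=1.96):
    """Margin of error for the gap p1 - p2 when both shares come from one poll."""
    variance = (p1 + p2 - (p1 - p2) ** 2) / n
    return z * math.sqrt(variance)


p_a, p_b, n = 0.48, 0.46, 1000  # shares from the example; n is an assumed sample size
moe = lead_margin_of_error(p_a, p_b, n)
lead = p_a - p_b

print(f"lead = {lead * 100:.1f} points, margin on lead = ±{moe * 100:.1f} points")
print("lead exceeds its margin of error:", abs(lead) > moe)
```

Under these assumptions the 2-point lead carries a margin of about ±6 points, so the poll cannot distinguish a narrow lead for either candidate.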

To improve reliability, pollsters employ techniques like stratified sampling and weighting to ensure the sample reflects the population’s demographics. However, the reported margin of error covers only random sampling variability; it does not account for nonresponse, coverage gaps, or question wording. For instance, a poll with a low response rate may overrepresent certain groups, skewing results despite a small margin of error.
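Weighting has a cost that is worth seeing in numbers: uneven weights reduce the effective sample size, which widens the margin of error. The sketch below uses Kish's approximation for effective sample size. The weights are hypothetical, and the calculation assumes the worst case p = 0.5 at 95% confidence.

```python
import math


def effective_sample_size(weights):
    """Kish approximation: (sum of weights)^2 / (sum of squared weights)."""
    total = sum(weights)
    return total * total / sum(w * w for w in weights)


# Hypothetical weights: 800 respondents at weight 0.75, 200 at weight 2.0.
weights = [0.75] * 800 + [2.0] * 200

n = len(weights)
n_eff = effective_sample_size(weights)
moe_nominal = 1.96 * math.sqrt(0.25 / n)       # ignores weighting
moe_weighted = 1.96 * math.sqrt(0.25 / n_eff)  # accounts for unequal weights

print(f"nominal n = {n}, effective n = {n_eff:.0f}")
print(f"±{moe_nominal * 100:.1f} vs ±{moe_weighted * 100:.1f} points")
```

Here 1,000 interviews behave like about 800 once weighted, and the margin grows from roughly ±3.1 to ±3.5 points. This is one reason to check whether a published margin of error accounts for weighting.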

In conclusion, the margin of error is not a flaw but a feature of polling, providing a transparent measure of uncertainty. By understanding its role, readers can critically evaluate poll predictions, recognizing that even small margins can mask significant ambiguity. Always ask: What is the margin of error, and does it render the reported lead meaningful? This question transforms passive consumers of poll data into informed interpreters.


Online vs. Phone Polls: Differences in response rates and demographic representation between methods

Political polls have long relied on phone surveys, but the rise of online polling has introduced a new dynamic to data collection. One critical difference between these methods lies in response rates. Phone polls, particularly landline surveys, traditionally boasted higher response rates due to their intrusive nature—a ringing phone demands attention. However, as landline usage declines and caller ID allows screening, response rates have plummeted to single digits in many cases. Online polls, on the other hand, often achieve higher participation through convenience and anonymity, though they rely heavily on panel recruitment and incentives. A 2020 Pew Research study found that online polls had a response rate of around 10%, compared to 6% for phone surveys, highlighting a shift in engagement patterns.

Demographic representation is another area where online and phone polls diverge sharply. Phone polls tend to overrepresent older adults, particularly those aged 65 and above, who are more likely to have landlines and answer calls from unknown numbers. Conversely, younger demographics, especially those under 30, are vastly underrepresented in phone surveys due to their reliance on mobile phones and reluctance to engage with cold calls. Online polls, however, capture a broader age range, with higher participation from younger and middle-aged adults. Yet, they often underrepresent low-income individuals and those with limited internet access, creating a digital divide. For instance, a 2019 study by the American Association for Public Opinion Research (AAPOR) found that online polls had 40% fewer respondents from households earning under $30,000 annually compared to phone surveys.

To address these disparities, pollsters must employ strategic sampling techniques. For phone polls, this might involve increasing mobile phone sampling and offering small incentives to boost response rates among younger demographics. Online polls, meanwhile, should focus on diversifying panels to include underrepresented groups, such as rural residents and low-income households. Combining methods—a practice known as mixed-mode polling—can also improve representation. For example, a 2021 election poll by NPR used both phone and online surveys, achieving a more balanced demographic profile than either method alone.

Despite these efforts, bias remains a challenge. Online polls are susceptible to self-selection bias, as participants must opt into panels, while phone polls face non-response bias due to declining participation. Pollsters must weigh these trade-offs when choosing a method. For instance, a campaign targeting older voters might prioritize phone polls, while one focusing on urban millennials could lean toward online surveys. Ultimately, understanding the strengths and limitations of each method is key to interpreting poll results accurately.

In practical terms, poll consumers should scrutinize methodology before drawing conclusions. Questions to ask include: What was the response rate? How were participants recruited? Was the sample weighted to correct demographic imbalances? By considering these factors, one can better assess whether a poll’s findings are reliable or skewed by methodological choices. Whether online or by phone, the goal remains the same: to capture public opinion as accurately as possible, despite the inherent challenges of each approach.


Frequently asked questions

How are political polls conducted?

Political polls are typically conducted through various methods, including telephone interviews, online surveys, in-person interviews, and mail questionnaires. The choice of method depends on the target population, budget, and desired accuracy.

Who conducts political polls?

Political polls are conducted by research organizations, media outlets, universities, and polling firms. Examples include Gallup, Pew Research Center, and Quinnipiac University, as well as news organizations like CNN and Fox News.

Are political polls always accurate?

Political polls are not always accurate due to factors like sampling errors, response bias, non-response bias, and changes in public opinion over time. Accuracy also depends on the poll's methodology and sample size.

How often are political polls conducted?

The frequency of political polls varies depending on the context, such as election cycles or major political events. During election seasons, polls may be conducted weekly or even daily, while in non-election periods, they are less frequent.