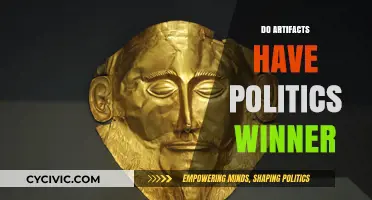

The claim that artifacts have politics challenges the notion that technology and design are neutral, arguing instead that they embody values, biases, and power structures. Advanced by Langdon Winner in his influential 1980 essay, the idea explores how the design and implementation of artifacts, from bridges to software, reflect and reinforce societal norms, often invisibly shaping human behavior and opportunities. This review examines how artifacts can either perpetuate inequality or foster inclusivity, and it prompts critical examination of the intentions behind their creation and their broader societal impacts. Drawing on case studies and theoretical frameworks, it highlights the ethical responsibility of designers, engineers, and policymakers to create artifacts that align with democratic values and promote equitable outcomes.

| Characteristics | Values |
|---|---|
| Author | Langdon Winner |
| Publication Year | 1980 |
| Main Argument | Artifacts (technologies) embody political qualities and can reinforce or challenge social and political structures. |
| Key Concepts | Inherent politics of artifacts, technological determinism, social shaping of technology |
| Examples Discussed | Robert Moses' low bridges (exclusionary design), atomic bomb, pesticides |
| Critique of Neutrality | Rejects the idea that technologies are neutral tools, emphasizing their embedded values and biases. |
| Influence | Foundational text in Science and Technology Studies (STS) and political philosophy. |
| Methodology | Philosophical analysis, case studies, historical examination |
| Relevance Today | Applies to contemporary issues like AI bias, surveillance technologies, and environmental technologies. |
| Controversies | Debates over the extent to which artifacts independently shape politics vs. human agency. |
| Interdisciplinary Impact | Bridges philosophy, sociology, political science, and engineering. |



Design's inherent values and biases

Artifacts, from the layout of a city to the design of a smartphone, are not neutral. They embody the values, assumptions, and biases of their creators, often in ways that are invisible to the untrained eye. Consider the curb cuts originally designed for wheelchair accessibility—they now benefit parents with strollers, delivery workers, and cyclists. This example illustrates how design choices, though seemingly minor, can embed inclusivity or exclusion, shaping societal norms and behaviors.

To uncover inherent biases, examine the intended user. A voice recognition system trained primarily on male voices will struggle with female or non-binary users, perpetuating gender bias. Similarly, a fitness tracker that defaults to a 70 kg male body type excludes diverse users, reinforcing narrow health standards. Designers must ask: Whose needs are prioritized? Whose experiences are ignored? These questions reveal the power dynamics embedded in every artifact.

Practical steps can mitigate biased design. First, diversify design teams to include perspectives from various ages, genders, cultures, and abilities. Second, conduct user testing with a broad demographic to identify blind spots. For instance, a study of smart home devices found that older adults struggled with complex interfaces, highlighting the need for simplicity and clarity. Third, adopt ethical frameworks like the "Equity-Centered Design" approach, which explicitly addresses systemic inequalities.

Caution is necessary when relying on data-driven design. Algorithms trained on historical data often inherit past biases, such as facial recognition systems misidentifying people of color. To counteract this, audit datasets for representation gaps and implement fairness metrics. For example, a healthcare app should ensure its algorithms perform equally well across all racial and ethnic groups, avoiding harmful disparities in treatment recommendations.
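The audit described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a standard method: the function names `representation_gaps` and `accuracy_by_group`, the group labels, the reference shares, and the toy predictions are all hypothetical. It compares each group's share of a dataset with a chosen reference share, then reports accuracy separately per group so that unequal performance becomes visible.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return (dataset share - reference share) for each reference group.

    A negative value means the group is under-represented relative to the
    reference population chosen by the auditor (an assumption of this sketch).
    """
    total = len(groups)
    if total == 0:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    return {g: counts.get(g, 0) / total - share
            for g, share in reference_shares.items()}


def accuracy_by_group(groups, y_true, y_pred):
    """Return the fraction of correct predictions within each group."""
    seen = Counter()
    correct = Counter()
    for g, t, p in zip(groups, y_true, y_pred):
        seen[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / seen[g] for g in seen}


# Hypothetical toy data: group "a" dominates the dataset.
groups = ["a", "a", "a", "b"]
reference_shares = {"a": 0.5, "b": 0.5}
y_true = [1, 0, 1, 1]
y_pred = [1, 0, 1, 0]

gaps = representation_gaps(groups, reference_shares)
acc = accuracy_by_group(groups, y_true, y_pred)
print(gaps)  # group "b" is 25 points below its reference share
print(acc)   # the single "b" example is misclassified
```

Which reference population and which metric are appropriate depends on the application; per-group accuracy is only one of several possible fairness measures.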

Ultimately, recognizing design’s inherent values and biases transforms artifacts from passive tools into active agents of change. By embedding equity and inclusivity into the design process, creators can challenge societal norms rather than reinforce them. A well-designed artifact doesn’t just solve a problem; it reflects a vision of the world as it should be, not as it is. This shift requires intentionality, humility, and a commitment to questioning the status quo at every stage of creation.


Technological determinism vs. social shaping

The debate between technological determinism and social shaping hinges on whether technology drives societal change or if society molds technology to its needs. Technological determinism posits that innovations like the printing press or the internet inherently reshape culture, economics, and politics, often in predictable ways. For instance, the widespread adoption of smartphones didn’t just change communication—it altered daily routines, work patterns, and even mental health dynamics. This view treats technology as an autonomous force, marching forward with its own logic, leaving society to adapt.

Contrast this with social shaping, which argues that technology is a product of human intentions, values, and power structures. Consider the design of urban infrastructure: bike lanes, highways, and public transit systems aren’t neutral; they reflect decisions about mobility, accessibility, and environmental priorities. A city prioritizing car traffic over pedestrian walkways isn’t shaped by the inherent nature of vehicles but by policy choices that favor speed over community interaction. This perspective emphasizes that artifacts are imbued with the politics of their creators, not just their functionality.

To illustrate, examine the case of facial recognition technology. From a determinist perspective, its increasing accuracy and ubiquity inevitably lead to heightened surveillance, eroding privacy regardless of societal intent. Yet social shaping highlights how its deployment varies: in China, it’s a tool of state control, while in Europe, strict regulations limit its use to protect civil liberties. The same artifact yields different political outcomes, which suggests that technology’s impact isn’t predetermined but negotiated.

Practical takeaways emerge from this tension. For policymakers, recognizing the social shaping of technology means actively steering innovation toward equitable outcomes. For example, designing AI systems with transparency and accountability can mitigate biases baked into algorithms. Conversely, understanding technological determinism’s limits reminds us that not all consequences are foreseeable—a caution to approach new tools with humility and adaptability.

Ultimately, the dichotomy isn’t about choosing sides but acknowledging the interplay. Technology doesn’t exist in a vacuum, nor does society passively receive it. Artifacts carry politics because they are both shaped by and shape the world around them. Navigating this dynamic requires a dual lens: one that critiques technology’s role in society while demanding society’s active role in shaping technology.


Artifacts as political actors

Artifacts, from the design of a city grid to the interface of a voting machine, are not neutral. They embody and enact political choices, often invisibly shaping behavior and reinforcing power structures. Consider the low-clearance bridges over New York’s Long Island parkways, designed under Robert Moses in the 1920s to prevent buses (and, by extension, lower-income residents) from accessing affluent areas. This example illustrates how artifacts can be deliberate tools of exclusion, their form reflecting and enforcing social hierarchies. Such designs are not accidents but intentional acts of political engineering, baked into the material world.

To analyze an artifact’s political role, ask: *Whose needs does it serve? Whose does it ignore?* Take the case of the QWERTY keyboard layout, often cited as a lock-in mechanism that prioritizes historical convenience over ergonomic efficiency. Its persistence demonstrates how artifacts can perpetuate systems that favor established power players, even at the expense of user well-being. This isn’t merely a technical issue—it’s a political one, as it limits innovation and maintains the status quo. Such analyses require shifting focus from *what* an artifact does to *whom* it benefits.

When designing or critiquing artifacts, adopt a political lens by following these steps: 1) Identify stakeholders—not just users, but those indirectly affected. 2) Map power dynamics—who controls the artifact’s creation, distribution, and use? 3) Anticipate unintended consequences—how might it reinforce inequality or create new forms of exclusion? For instance, facial recognition systems, while marketed as neutral tools, disproportionately misidentify people of color, embedding racial bias into technology. By treating artifacts as political actors, designers and users can challenge harmful defaults and advocate for equitable alternatives.

A cautionary note: attributing politics to artifacts risks oversimplifying complex systems. Not all consequences are intentional, and not every design flaw is a conspiracy. However, this doesn’t absolve creators of responsibility. The takeaway is to approach artifacts critically, recognizing their potential to either entrench or disrupt power. For example, open-source software challenges corporate monopolies by redistributing control, while smart city sensors can either enhance public services or become tools of surveillance. The choice lies in how we design, deploy, and contest these tools. Artifacts are not passive—they are actors in the political theater of everyday life, and their scripts are ours to rewrite.


Power dynamics in technology use

The design and implementation of technology are never neutral, especially when considering the power dynamics at play. Take the example of facial recognition systems, which have been shown to exhibit racial and gender biases due to the lack of diversity in training datasets. These biases are not inherent to the technology itself but are embedded through the choices made by developers and the societal structures they operate within. When a system misidentifies a person of color at a rate significantly higher than a white individual, it perpetuates existing power imbalances, reinforcing systemic discrimination under the guise of objectivity.
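The disparity described above can be made measurable. The sketch below is illustrative only: the record format, the group labels `"x"` and `"y"`, and the helper names `false_match_rate` and `disparity_ratio` are assumptions, not part of any real system. It computes, for each group, how often pairs of different people are wrongly declared a match, then compares the worst group with the best.

```python
def false_match_rate(records):
    """Compute the per-group false match rate.

    records: iterable of (group, is_same_person, predicted_match) tuples.
    Only pairs of different people (is_same_person == False) can produce
    a false match, so genuine pairs are skipped.
    """
    impostor_pairs = {}
    false_matches = {}
    for group, is_same_person, predicted_match in records:
        if is_same_person:
            continue
        impostor_pairs[group] = impostor_pairs.get(group, 0) + 1
        false_matches[group] = false_matches.get(group, 0) + int(predicted_match)
    return {g: false_matches[g] / impostor_pairs[g] for g in impostor_pairs}


def disparity_ratio(rates):
    """Ratio of the highest group rate to the lowest (1.0 means parity)."""
    lowest, highest = min(rates.values()), max(rates.values())
    if highest == 0:
        return 1.0  # no false matches in any group
    return float("inf") if lowest == 0 else highest / lowest


# Hypothetical comparison records for two groups.
records = [
    ("x", False, False), ("x", False, False), ("x", False, False), ("x", False, True),
    ("y", False, True), ("y", False, True), ("y", False, False), ("y", False, False),
    ("x", True, True), ("y", True, False),  # genuine pairs, ignored here
]

rates = false_match_rate(records)
print(rates)
print(disparity_ratio(rates))
```

A ratio well above 1.0 signals that one group bears a larger share of misidentifications; what threshold counts as acceptable is a policy decision rather than a technical one.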

To address these power dynamics, it’s essential to adopt a participatory design approach, involving marginalized communities in the development process. For instance, when designing healthcare apps for low-income populations, developers must engage directly with users to understand their needs, constraints, and cultural contexts. This ensures that the technology serves as a tool for empowerment rather than exclusion. Practical steps include conducting focus groups, offering training sessions, and providing feedback mechanisms that allow users to shape the technology’s evolution. Without such inclusivity, even well-intentioned innovations risk amplifying inequalities.

Consider the case of smart city technologies, often marketed as solutions for urban efficiency. While these systems optimize traffic flow or energy use, they frequently prioritize the needs of affluent residents and businesses, sidelining the concerns of marginalized groups. For example, surveillance cameras installed in low-income neighborhoods may deter crime but also create a sense of constant monitoring, eroding trust and autonomy. Here, the power dynamic is clear: the technology benefits those who control it, while those under its gaze bear the costs. Policymakers must balance innovation with equity, ensuring that technological advancements do not entrench social hierarchies.

A persuasive argument for rethinking power dynamics in technology use lies in the long-term societal impact. When technologies like predictive policing algorithms disproportionately target minority communities, they undermine public trust and perpetuate cycles of injustice. Conversely, tools designed with fairness and accountability—such as open-source algorithms with transparent decision-making processes—can foster greater equity. Advocates should push for regulatory frameworks that mandate bias audits, diversity in tech teams, and community oversight. By doing so, we can shift the narrative from technology as a tool of control to one of collective progress.

Finally, a comparative analysis of global technology use reveals how power dynamics vary across cultural and political contexts. In authoritarian regimes, technologies like social media monitoring are wielded to suppress dissent, while in democratic societies, they may be used to amplify marginalized voices. This duality underscores the importance of context in shaping technological outcomes. For instance, while digital payment systems in Kenya (e.g., M-Pesa) have empowered millions by providing access to financial services, similar technologies in other regions have been criticized for exploiting users through high fees and data harvesting. Understanding these nuances is crucial for crafting policies that harness technology’s potential while mitigating its risks.


Ethical implications of artifact design

Artifacts, from the design of a smartphone to the layout of a city, embed values and biases that shape human behavior and societal norms. Consider the ethical implications of facial recognition technology: its design often reflects the demographics of its creators, leading to higher error rates for people of color and women. This isn’t a neutral flaw but a political outcome, rooted in who is included—or excluded—from the design process. Such biases amplify existing inequalities, turning a tool meant for security into a mechanism of discrimination. This example underscores how artifact design isn’t just about functionality but about whose interests are prioritized.

Designers must adopt a proactive ethical framework to mitigate harm. Start by conducting diversity audits during the prototyping phase, ensuring that test groups represent a wide range of ages, ethnicities, and abilities. For instance, a voice assistant should be trained on datasets that include accents from non-native English speakers, reducing frustration for millions of users. Similarly, medical devices like pulse oximeters, historically less accurate on darker skin tones, require recalibration to ensure equitable health outcomes. These steps aren’t optional—they’re essential to prevent artifacts from perpetuating systemic injustices.

A persuasive argument for ethical design lies in its long-term benefits. Companies that prioritize inclusivity in their products often gain broader market appeal and consumer trust. For example, Microsoft’s AI principles, which emphasize fairness and accountability, have helped position the company as a leader in responsible tech. Conversely, firms that ignore ethical considerations risk backlash, as seen with Amazon’s Rekognition software, which faced widespread criticism over its use by law enforcement. Ethical design isn’t just a moral imperative; it’s a strategic advantage in an increasingly conscious marketplace.

Finally, consider the role of regulation in shaping ethical artifact design. While self-governance is ideal, industries often require external standards to ensure compliance. The European Union’s GDPR sets a precedent for how data-driven artifacts should protect user privacy, forcing companies worldwide to rethink their designs. Similarly, proposed regulations on AI transparency could mandate that algorithms disclose their limitations and biases. Such measures don’t stifle innovation—they ensure it serves the public good. Designers and policymakers must collaborate to create frameworks that balance creativity with accountability, ensuring artifacts empower rather than exploit.


Frequently asked questions

The main argument of Winner’s essay is that technological artifacts are not neutral; they embody political values and ideologies, often reflecting the priorities and biases of their creators or the societies in which they are developed.

The essay was written by Langdon Winner, a political theorist and philosopher, and published in 1980 in the journal *Daedalus*.

Winner cites the low clearance heights of bridges on Long Island parkways, designed by Robert Moses, as an example. These bridges prevented buses (often used by lower-income or minority groups) from accessing certain areas, effectively excluding them from public spaces.

The essay challenges the notion of technological neutrality, encouraging critical analysis of how design choices in technology can reinforce or challenge existing power structures, inequalities, and social norms.