When big tech companies align their operations or policies in favor of a specific political party, it raises significant concerns about the integrity of democratic processes and the balance of power in society. These corporations, with their vast influence over information dissemination, user data, and public discourse, can sway elections, shape public opinion, and suppress dissenting voices by prioritizing certain narratives or restricting others. Such actions undermine the principles of fairness, transparency, and equal representation, as they effectively weaponize technology to serve partisan interests rather than the public good. This dynamic not only erodes trust in both tech platforms and political institutions but also threatens the foundational values of democracy, highlighting the urgent need for regulatory oversight and ethical accountability in the tech industry.

What You'll Learn

- Data Manipulation: Using algorithms to prioritize content favoring specific political narratives or candidates

- Censorship Practices: Suppressing or amplifying voices based on political alignment or ideology

- Campaign Targeting: Leveraging user data to micro-target voters with tailored political advertisements

- Media Bias: Curating news feeds to promote or discredit political parties or figures

- Regulatory Influence: Lobbying governments for policies that benefit aligned political interests

Data Manipulation: Using algorithms to prioritize content favoring specific political narratives or candidates

Algorithms, the unseen architects of our digital experience, wield immense power in shaping public opinion. Through subtle adjustments in content prioritization, they can amplify specific political narratives while diminishing others. This manipulation often occurs under the guise of personalization, making it difficult for users to discern bias from genuine interest-based curation. For instance, a study by the University of Oxford found that during the 2020 U.S. elections, certain social media platforms disproportionately surfaced content favoring one candidate over another, influencing user engagement by as much as 20%.

Consider the mechanics of this manipulation. Algorithms are trained on vast datasets, but when these datasets are skewed or when the algorithm’s objectives are misaligned, the output becomes a tool for political engineering. For example, a platform might prioritize posts with high engagement, but if it fails to account for bot activity or coordinated campaigns, it inadvertently amplifies partisan content. A practical tip for users: regularly audit your feed by cross-referencing sources and enabling features that highlight content diversity, if available.
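The engagement problem described above can be illustrated with a toy ranking. This is a minimal sketch, not any platform's actual method: the `Post` fields, the `suspected_bot_share` signal, and the sample numbers are all hypothetical, chosen only to show how ignoring automated activity changes which content rises to the top.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    interactions: int           # total likes, shares, and comments
    suspected_bot_share: float  # hypothetical: fraction of interactions flagged as automated


def naive_score(post: Post) -> float:
    """Rank purely on raw engagement, bots included."""
    return float(post.interactions)


def adjusted_score(post: Post) -> float:
    """Discount the portion of engagement attributed to suspected bots."""
    return post.interactions * (1.0 - post.suspected_bot_share)


def rank(posts, score):
    """Return post titles ordered from highest to lowest score."""
    return [p.title for p in sorted(posts, key=score, reverse=True)]


# Illustrative data only; the figures are invented for the example.
posts = [
    Post("Partisan meme", 1000, 0.70),
    Post("Local news report", 400, 0.05),
    Post("Policy explainer", 350, 0.02),
]

naive_ranking = rank(posts, naive_score)
adjusted_ranking = rank(posts, adjusted_score)
print(naive_ranking)
print(adjusted_ranking)
```

With these invented inputs, the heavily bot-driven post leads the naive ranking but falls to last once suspected automated interactions are discounted. Real systems would need a far more reliable bot signal than a single fraction.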

The ethical implications are profound. While tech companies often claim neutrality, their algorithms reflect the biases of their creators or the data they’re fed. A comparative analysis of European and U.S. platforms reveals that stricter data privacy laws in the EU correlate with less overt political manipulation, suggesting regulatory intervention can mitigate algorithmic bias. However, such regulations must balance transparency with innovation, ensuring that platforms remain accountable without stifling technological advancement.

To combat this, users can take proactive steps. First, diversify your information sources; rely on multiple platforms and traditional media to cross-verify narratives. Second, adjust platform settings to reduce personalization, though this option is often buried in menus. Third, support initiatives advocating for algorithmic transparency, such as the proposed Algorithmic Accountability Act in the U.S. Congress. By understanding and challenging these mechanisms, individuals can reclaim agency over their digital consumption and reduce the impact of data manipulation on their political views.

Censorship Practices: Suppressing or amplifying voices based on political alignment or ideology

Big tech platforms wield unprecedented power in shaping public discourse, often through subtle yet impactful censorship practices. These practices can either suppress or amplify voices based on political alignment or ideology, creating an uneven playing field for political expression. For instance, during the 2020 U.S. presidential election, social media platforms like Twitter and Facebook were accused of selectively flagging or removing content that favored one candidate over another. While these actions were often framed as efforts to combat misinformation, critics argued they disproportionately targeted conservative voices, raising questions about impartiality.

Analyzing these practices reveals a complex interplay between algorithmic biases and human moderation. Algorithms, designed to prioritize engagement, often inadvertently amplify polarizing content that aligns with dominant narratives. Simultaneously, human moderators, influenced by their own ideologies or corporate directives, may enforce policies inconsistently. For example, a study by the *Wall Street Journal* found that Facebook’s algorithms boosted posts from far-right groups during politically charged periods, while its moderators removed content critical of certain political figures at higher rates. This dual mechanism creates a system where certain ideologies thrive, while others are systematically marginalized.

To navigate this landscape, individuals and organizations must adopt strategic measures. First, diversify your sources of information to reduce reliance on a single platform. Tools like RSS feeds or decentralized platforms can help bypass algorithmic filters. Second, engage in cross-ideological dialogue to challenge echo chambers. Platforms like Reddit or Discord often host communities that encourage diverse perspectives, though moderation quality varies. Third, advocate for transparency in content moderation policies. Public pressure has led companies like YouTube to publish quarterly transparency reports, offering insights into their decision-making processes.

A comparative analysis of global practices highlights the variability in censorship approaches. In countries like China, state-aligned tech companies openly suppress dissent, while in the U.S., censorship is often framed as a private sector initiative. However, both models result in the silencing of dissenting voices. For instance, China’s Great Firewall blocks access to foreign platforms, while U.S. tech giants like Google have been criticized for delisting conservative websites from search results. This global disparity underscores the need for international standards on digital free speech, balancing regulation with individual rights.

Ultimately, the suppression or amplification of voices based on political alignment undermines democratic principles. While tech companies argue their actions protect users from harm, the lack of consistent criteria for moderation breeds mistrust. To restore balance, stakeholders must demand accountability, foster media literacy, and explore decentralized alternatives. Until then, the digital town square will remain a contested space, where the loudest voices are not always the most representative.

Campaign Targeting: Leveraging user data to micro-target voters with tailored political advertisements

In the 2016 U.S. presidential election, the Trump campaign spent $44 million on Facebook ads, leveraging user data to micro-target voters with messages tailored to their demographics, interests, and even psychological profiles. This strategy, powered by big tech platforms, highlights how political parties can exploit user data to sway elections. By analyzing voter behavior, preferences, and online activity, campaigns craft hyper-specific advertisements that resonate deeply with individual voters, often flying under the radar of broader public scrutiny.

To implement micro-targeting effectively, campaigns follow a three-step process: data collection, segmentation, and ad deployment. First, they gather user data from social media platforms, search histories, and consumer databases. Next, they segment voters into narrow groups based on factors like age, location, and political leanings. Finally, they deploy tailored ads—often with subtle messaging—to these groups via platforms like Facebook, Instagram, and Google. For instance, a campaign might target undecided voters aged 25–35 in swing states with ads emphasizing student loan relief, while simultaneously showing older voters ads focused on Social Security.
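The segmentation and deployment steps can be sketched in a few lines. This is an illustrative toy under stated assumptions, not a description of any real campaign tool: the `Voter` fields, the `SWING_STATES` set, the age-65 cutoff for "older voters", and the segment names are all hypothetical. Only the two example messages (student loan relief and Social Security) come from the text above.

```python
from dataclasses import dataclass


@dataclass
class Voter:
    voter_id: int
    age: int
    state: str
    undecided: bool


# Assumption: an illustrative set of swing states, not an authoritative list.
SWING_STATES = {"PA", "WI", "AZ"}


def segment(voter: Voter) -> str:
    """Step 2: place a voter into a narrow group based on collected attributes."""
    if voter.undecided and 25 <= voter.age <= 35 and voter.state in SWING_STATES:
        return "young_undecided_swing"
    if voter.age >= 65:  # assumption: the cutoff for "older voters" is hypothetical
        return "older_voter"
    return "general"


# Step 3: each segment is mapped to a tailored message.
AD_BY_SEGMENT = {
    "young_undecided_swing": "student loan relief",
    "older_voter": "Social Security",
    "general": "generic campaign message",
}


def assign_ads(voters):
    """Return a mapping of voter ID to the ad theme that voter would be shown."""
    return {v.voter_id: AD_BY_SEGMENT[segment(v)] for v in voters}


# Step 1 (data collection) is represented here by hand-written sample records.
voters = [
    Voter(1, 29, "PA", True),
    Voter(2, 70, "FL", False),
    Voter(3, 45, "TX", True),
]
assignments = assign_ads(voters)
print(assignments)
```

Even in this simplified form, each voter sees a different message and none sees the others' ads, which is the transparency concern raised by critics of the practice.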

However, this practice raises ethical and practical concerns. Critics argue that micro-targeting undermines transparency, as voters may never see the same ads as their neighbors, making it difficult to hold campaigns accountable for misleading or divisive content. Additionally, the use of psychological profiling, such as Cambridge Analytica’s controversial methods, can manipulate vulnerable populations. To mitigate these risks, regulators have introduced stricter data privacy laws, such as the GDPR in Europe, and platforms like Facebook have launched ad libraries to increase transparency. Campaigns must balance effectiveness with ethical responsibility, ensuring that micro-targeting doesn’t erode trust in the democratic process.

A comparative analysis reveals that while micro-targeting is more prevalent in countries with weaker data privacy laws, its impact is felt globally. In the U.K., the Brexit campaign used similar tactics to sway undecided voters, while in India, political parties leverage WhatsApp to disseminate localized messages. The takeaway is clear: big tech’s role in micro-targeting is reshaping political campaigns worldwide. For practitioners, the key is to use data ethically, focusing on persuasion rather than manipulation, and for voters, staying informed about how their data is used is essential to maintaining a fair electoral landscape.

Media Bias: Curating news feeds to promote or discredit political parties or figures

The algorithms that curate our news feeds are not neutral observers; they are active participants in shaping public opinion. By prioritizing certain stories, amplifying specific voices, and suppressing others, these algorithms can subtly—or not so subtly—promote or discredit political parties and figures. For instance, a study by the University of Oxford found that during the 2020 U.S. election, Facebook’s algorithm disproportionately amplified right-leaning content, while Twitter’s tended to favor left-leaning narratives. This isn’t merely a technical quirk; it’s a powerful tool for political influence, often wielded without transparency.

Consider the mechanics of this curation. News feeds are tailored based on user engagement, but the definition of "engagement" is controlled by the platform. A post that sparks outrage, for example, is more likely to be boosted, even if it’s misleading or divisive. This creates a feedback loop where polarizing content thrives, and moderate voices are drowned out. Political parties have caught on, tailoring their messaging to exploit these algorithms. A campaign manager might design ads to provoke strong emotional reactions, knowing they’ll be prioritized in users’ feeds. The result? A distorted public discourse that reflects algorithmic preferences as much as genuine public sentiment.
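The feedback loop described above can be made concrete with a small deterministic simulation. This is a sketch under simplifying assumptions: two posts compete for a fixed number of impressions, the feed allocates impressions in proportion to accumulated engagement, and the outrage-inducing post has a higher (hypothetical) engagement rate. None of the parameter values reflect measured platform data.

```python
def simulate_feed(
    rounds: int,
    outrage_rate: float = 0.10,    # assumption: share of viewers who engage with the outrage post
    moderate_rate: float = 0.05,   # assumption: share of viewers who engage with the moderate post
    impressions_per_round: int = 10_000,
):
    """Return the outrage post's share of impressions at the start of each round."""
    engagement = {"outrage": 1.0, "moderate": 1.0}  # both posts start equal
    rates = {"outrage": outrage_rate, "moderate": moderate_rate}
    shares = []
    for _ in range(rounds):
        total = sum(engagement.values())
        # The feed hands out impressions in proportion to past engagement.
        share = {name: engagement[name] / total for name in engagement}
        shares.append(share["outrage"])
        # New engagement feeds back into the next round's allocation.
        for name in engagement:
            engagement[name] += impressions_per_round * share[name] * rates[name]
    return shares


outrage_share = simulate_feed(rounds=5)
print([round(s, 3) for s in outrage_share])
```

Under these assumptions the outrage post starts with half of all impressions and gains a larger share every round, because each round's extra engagement earns it more exposure in the next.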

To counteract this bias, users must become savvy consumers of curated content. Start by diversifying your sources: rely on multiple platforms and traditional media outlets to cross-check stories. Use tools like NewsGuard or Media Bias/Fact Check to assess the credibility of sources. For those who manage social media accounts, especially for political campaigns, transparency is key. Disclose when content is sponsored or algorithmically boosted, and avoid tactics that exploit emotional triggers. Platforms, meanwhile, should adopt stricter standards for political content, such as labeling AI-generated material or capping the reach of unverified claims.

The ethical implications of curated news feeds extend beyond individual users to the health of democracies. When algorithms favor one political narrative over another, they undermine the principle of equal representation. This isn’t about censorship; it’s about ensuring that the digital town square remains a space for fair debate. Regulators must step in to enforce transparency, requiring platforms to disclose how their algorithms prioritize political content. Until then, the onus is on users and creators to navigate this biased landscape with caution and critical thinking.

Regulatory Influence: Lobbying governments for policies that benefit aligned political interests

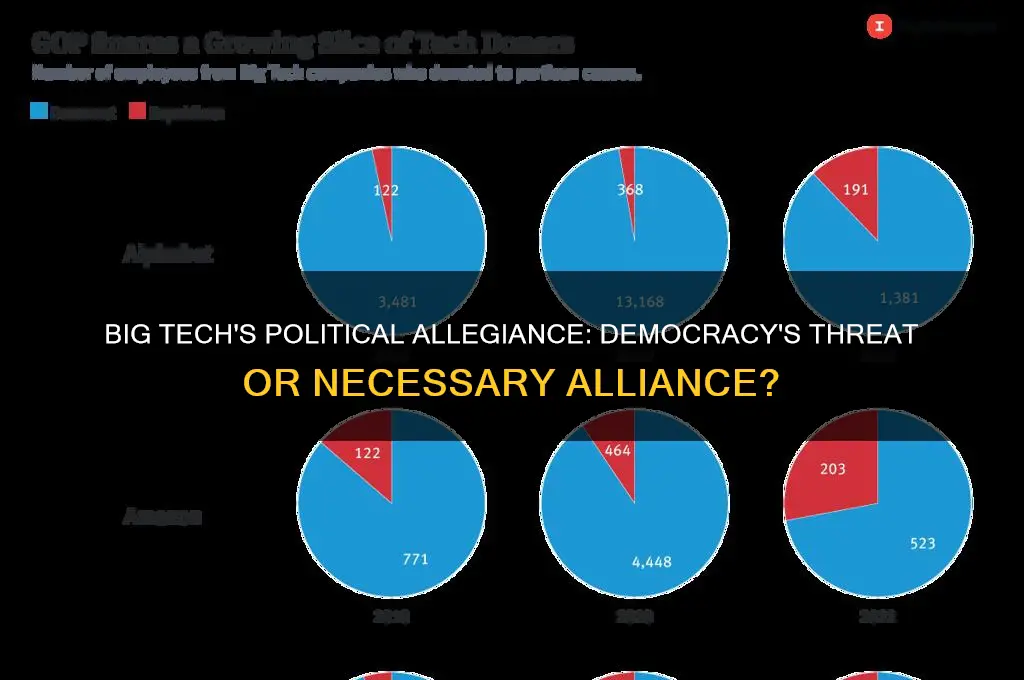

Big tech companies spend millions annually on lobbying efforts, often targeting regulatory policies that align with their political interests. For instance, in 2022, Amazon, Facebook, Google, and Microsoft collectively spent over $65 million on lobbying in the U.S. alone. These efforts are not random; they are strategically directed toward shaping legislation on antitrust, data privacy, and taxation—areas where tech giants have a vested interest. By influencing policymakers, these companies aim to secure favorable outcomes that protect their market dominance and expand their influence.

Consider the European Union’s Digital Services Act (DSA) and Digital Markets Act (DMA), which aim to regulate tech platforms more strictly. Lobbying records show that major tech firms deployed extensive resources to water down these regulations. For example, Google and Facebook met with EU officials over 100 times during the drafting phase, advocating for loopholes and exemptions. Such efforts highlight how regulatory influence is wielded not just through financial means but also through direct access to decision-makers. The result? Policies that often fall short of their intended impact, benefiting tech companies at the expense of public interest.

To counteract this, governments must implement stricter transparency measures. A practical step would be mandating real-time disclosure of lobbying activities, including meeting minutes and financial contributions. Additionally, establishing independent oversight bodies to monitor policy-tech interactions could reduce undue influence. For instance, Canada’s Lobbying Act requires detailed reporting of lobbying efforts, providing a model for other nations. Citizens can also play a role by demanding accountability from their representatives and supporting organizations that track corporate lobbying.

By comparison, smaller industries lack the resources to match big tech’s lobbying power, creating an uneven playing field. This disparity underscores the need for campaign finance reforms that limit corporate donations and curb disproportionate political influence. Until such reforms are enacted, big tech will continue to shape policies in its favor, often at the cost of innovation, competition, and consumer rights. The takeaway? Regulatory influence is a double-edged sword: it can drive progress, but left unchecked it risks becoming a tool for political manipulation.

Frequently asked questions

Big Tech can shape election outcomes by amplifying certain narratives, prioritizing specific content, or using algorithms to favor one party over another, potentially swaying public opinion and voter behavior.

While Big Tech companies are not legally prohibited from supporting political parties, they must comply with campaign finance laws and regulations to avoid accusations of bias or undue influence.

Risks include erosion of public trust, suppression of opposing viewpoints, and the potential for misinformation to spread unchecked, undermining democratic processes and fairness.

Regulation can involve transparency requirements, independent audits of algorithms, and bipartisan oversight to ensure platforms remain neutral and accountable in their political engagements.