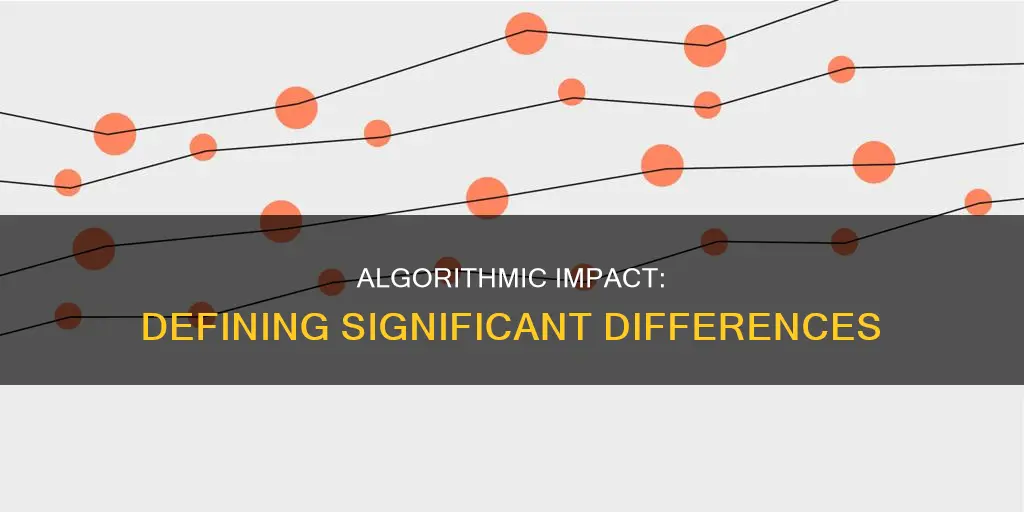

The analysis of algorithms is a critical process in computer science that aims to determine an algorithm's efficiency in terms of computational complexity: the time, storage, and other resources required to execute it. When comparing two algorithms, the challenge lies in evaluating their performance and deciding whether any difference between them is significant. One approach is to implement both algorithms as computer programs, run them on a suitable range of inputs, and measure how much of each resource they use. However, this approach has limitations, including the effort involved and the potential for bias, both in how the programs are written and in which empirical test cases are chosen. To address these challenges, asymptotic analysis estimates efficiency as the input size grows, giving computer scientists a way to assess candidate algorithms before implementing them. When comparing machine learning algorithms, statistical tests such as McNemar's test and various forms of the t-test are also used to determine whether differences in performance are significant. Ultimately, a difference between algorithms matters to the extent that it affects system performance, practicality, and efficiency.

| Characteristic | Description |
|---|---|
| Computational complexity | The amount of time, storage, or other resources needed to execute an algorithm |
| Time complexity | The number of steps an algorithm takes to execute |
| Space complexity | The number of storage locations an algorithm uses |
| Efficiency | An algorithm is efficient when its resource usage is small or grows slowly relative to the size of the input |
| Run-time efficiency | The time an algorithm takes to execute |
| Asymptotic analysis | A technique for measuring the efficiency of an algorithm as the input size becomes large |
| Replicability | The ability to reproduce experimental results |
| Type I error | False positive: rejecting a null hypothesis that is true |
| Type II error | False negative: failing to reject a null hypothesis that is false |

Run-time analysis

There are different classes of run-time complexity, such as logarithmic, linear, quasilinear, exponential, and factorial time. These are usually expressed in Big O notation, which describes an upper bound on how the number of operations grows as a function of the input size n. For example, the running time of a linear-time (O(n)) algorithm roughly doubles when the input size doubles, whereas an O(log n) algorithm needs only a constant amount of extra work each time the input size doubles, so its running time grows very slowly.
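
To make the difference between linear and logarithmic growth concrete, here is a small Python sketch that counts loop iterations rather than wall-clock time. It assumes a plain linear scan and a textbook binary search over a sorted list as the two illustrative algorithms; the function names are hypothetical. When the target is absent, the linear count doubles with every doubling of n, while the binary-search count grows by only about one.

```python
def linear_search_steps(items, target):
    """Return how many elements a linear scan examines (O(n))."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(items, target):
    """Return how many halving steps binary search takes on sorted items (O(log n))."""
    lo, hi = 0, len(items) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


for n in (1_000, 2_000, 4_000, 8_000):
    data = list(range(n))
    missing = -1  # an absent target forces the worst case for both searches
    print(n, linear_search_steps(data, missing), binary_search_steps(data, missing))
```

Counting steps instead of timing keeps the comparison independent of hardware, which is the same idea asymptotic analysis formalises.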

Empirical analysis is another method of evaluating the performance of an algorithm by running it on a set of inputs and measuring the time or resources it consumes. It provides actual data on how an algorithm behaves in practice and can complement theoretical analysis by verifying predictions and identifying unexpected behaviours. However, it is important to consider the environment in which the algorithm is running, as hardware and software can affect running time and resource consumption.
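
A minimal empirical comparison can be sketched with Python's standard `timeit` module. The choice of algorithms is an assumption made for illustration: a hand-written insertion sort (quadratic in the worst case) against Python's built-in `sorted`. A fixed random seed ensures both algorithms see identical inputs, and taking the fastest of several repeats reduces noise from other processes. The absolute numbers will differ between machines and interpreters, which is exactly the environmental caveat above.

```python
import random
import timeit


def insertion_sort(values):
    """Sort a copy of values with insertion sort (O(n^2) comparisons in the worst case)."""
    result = list(values)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        # Shift larger elements one slot to the right to make room for key.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result


def best_time(func, data, repeats=5):
    """Run func(data) several times and keep the fastest run to reduce timing noise."""
    timer = timeit.Timer(lambda: func(data))
    return min(timer.repeat(repeat=repeats, number=1))


random.seed(42)  # fixed seed: both algorithms see exactly the same inputs on every run
for n in (250, 500, 1_000):
    data = [random.random() for _ in range(n)]
    t_insertion = best_time(insertion_sort, data)
    t_builtin = best_time(sorted, data)
    print(f"n={n:>5}  insertion_sort={t_insertion:.5f}s  sorted={t_builtin:.5f}s")
```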

In summary, run-time analysis is a critical tool for understanding and comparing the efficiency of algorithms, and it can be performed through both theoretical and empirical methods. By examining the run-time complexity and growth rate of an algorithm, we can make informed decisions about its suitability for different applications.

Efficiency

Algorithm efficiency is a critical aspect of computer science: an efficient algorithm performs its task without straining the system's resources. It involves optimising the use of computational resources, including time and space, to produce the desired output. The concept of efficiency has evolved significantly since the early days of computing, when limited memory and processing power posed real challenges.

Time and Space Complexity

Time and space complexity are the two primary measures for evaluating algorithm efficiency. Time complexity focuses on the number of steps or iterations required for an algorithm to complete its task, with the goal of minimising execution time. Space complexity, on the other hand, addresses the amount of memory needed for an algorithm to execute, aiming to optimise memory usage.
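
The two measures often trade off against each other. The sketch below uses duplicate detection as an illustrative task (the function names are hypothetical): the first version compares every pair of items, using quadratic time but no extra memory; the second remembers values it has seen in a set, using linear expected time at the cost of linear extra memory.

```python
def has_duplicates_quadratic(items):
    """O(n^2) time, O(1) extra space: compare every pair of positions."""
    n = len(items)
    for i in range(n):
        for j in range(i + 1, n):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_with_set(items):
    """O(n) expected time, O(n) extra space: remember every value already seen.

    Assumes the items are hashable so they can be stored in a set.
    """
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


print(has_duplicates_quadratic([3, 1, 4, 1, 5]), has_duplicates_with_set([3, 1, 4, 1, 5]))
```

Which version is "more efficient" depends on whether time or memory is the scarcer resource for a given application.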

The efficiency of an algorithm is determined by how effectively it utilises resources, including time and space. An efficient algorithm consumes minimal computational resources, running within acceptable time and space constraints on available computers. As computational power and memory have advanced, the acceptable levels of resource consumption have also evolved.

Factors Influencing Efficiency

Several factors influence the efficiency of an algorithm. Firstly, the size of the dataset plays a crucial role. As the dataset grows, the time required to run functions on it also increases. Therefore, efficient algorithms aim to minimise the number of iterations or steps needed to complete the task relative to the dataset size.

The choice of programming language, coding techniques, compiler, and even operating system can also affect efficiency. For instance, interpreted languages are often slower than compiled ones. Additionally, some processors support vector or parallel processing, which can improve efficiency, although code may need to be restructured or reconfigured to take advantage of these capabilities.

Optimisation Techniques

To improve algorithm efficiency, various optimisation techniques can be employed. Recommended practices include avoiding nested loops, minimising the number of variables, and using smaller data types to save memory. More specialised strategies, such as cutoff binning, can significantly improve the execution efficiency of grid algorithms.
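
Choosing a smaller data type can be illustrated with Python's standard `array` module, which stores values in a compact fixed-size C representation. This is a minimal sketch assuming values that all fit in a signed byte; the same idea applies to NumPy dtypes or C integer types. The narrow array uses roughly one eighth of the memory of the 64-bit one, but it is only safe while every value stays within the smaller type's range.

```python
from array import array

n = 1_000_000
# Every value lies in 0..99, so it fits in a signed byte (-128..127).
values = [i % 100 for i in range(n)]

wide = array("q", values)    # signed long long: at least 8 bytes per item
narrow = array("b", values)  # signed char: at least 1 byte per item

# Payload size = number of items * bytes per item (object overhead excluded).
print("wide  :", len(wide) * wide.itemsize, "bytes")
print("narrow:", len(narrow) * narrow.itemsize, "bytes")

# The narrow type is only safe while values stay in range:
# array("b", [200]) raises OverflowError instead of silently wrapping around.
```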

In conclusion, algorithm efficiency is a fundamental concept in computer science, focusing on optimising resource utilisation. By understanding and improving efficiency, programmers can ensure that algorithms run effectively without overburdening the system's resources. This continuous pursuit of efficiency drives the advancement of technology and computing power.

Replicability

In the context of machine learning, replicability faces unique challenges due to the dynamic and complex nature of the field. To achieve replicability in machine learning, it is crucial to address several key aspects. Firstly, there must be a clear and detailed description of the algorithm, including complexity analysis, sample size, and links to the source code and dataset. Secondly, changes in algorithms, data, environments, and parameters must be meticulously tracked and recorded during experimentation. This includes dataset versioning, data distribution changes, and sample changes, as these factors can significantly impact the outcome.
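
Dataset versioning and parameter tracking can start very simply. The sketch below is one possible approach, not a prescribed format: it fingerprints the data with SHA-256, so any change to the rows changes the digest, and bundles that digest with the algorithm name, parameters, seed, and sample size. The record's field names and the example parameters are hypothetical.

```python
import hashlib
import json


def fingerprint_dataset(rows):
    """Return a SHA-256 digest of the dataset so any change to the data is detectable."""
    digest = hashlib.sha256()
    for row in rows:
        # Serialise each row deterministically (sorted keys) before hashing.
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def experiment_record(algorithm, params, rows, seed):
    """Collect what another researcher needs to rerun the experiment (illustrative fields)."""
    return {
        "algorithm": algorithm,
        "params": params,
        "seed": seed,
        "n_samples": len(rows),
        "dataset_sha256": fingerprint_dataset(rows),
    }


rows = [{"x": 1.0, "y": 0}, {"x": 2.5, "y": 1}]
record = experiment_record("logistic_regression", {"C": 1.0}, rows, seed=42)
print(json.dumps(record, indent=2))
```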

Additionally, the environment in which the project was built must be captured and easily reproducible. This entails logging framework dependencies, versions, hardware used, and other environmental factors. Proper logging of these parameters is essential, as changes to the original data or methodology can make it difficult to replicate the model and obtain the same results.
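
Capturing the environment can likewise be sketched with the standard library alone. The helper below is hypothetical: it records the interpreter version, operating system, machine architecture, and the installed versions of whichever packages are listed (here `numpy` and `scikit-learn`, chosen only as examples), and it marks missing packages explicitly rather than omitting them.

```python
import json
import platform
import sys
from importlib import metadata


def capture_environment(packages):
    """Record interpreter, OS, hardware architecture and package versions."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # record explicitly that the package is not installed
    return {
        "python": sys.version,
        "implementation": platform.python_implementation(),
        "os": platform.platform(),
        "machine": platform.machine(),
        "processor": platform.processor(),
        "packages": versions,
    }


env = capture_environment(["numpy", "scikit-learn"])
print(json.dumps(env, indent=2))
```

Storing this output next to each set of results makes it easier to tell whether a failed replication is due to a changed dependency or hardware.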

Furthermore, managing and storing model checkpoints and other artifacts is vital for replicability. Tools such as DVC make it easier to access data artifacts and import them into other projects, which helps others replicate and verify models.

Type I and Type II errors

In statistics, Type I and Type II errors represent two kinds of errors that can occur when making a decision about a hypothesis based on sample data. Understanding these errors is crucial for interpreting the results of hypothesis tests. In hypothesis testing, there are two competing hypotheses: the null hypothesis and the alternative hypothesis. The null hypothesis (H0) represents the default assumption that there is no effect, difference, or relationship in the population being studied. The alternative hypothesis (H1) suggests that there is a significant effect, difference, or relationship in the population.

A Type I error, also known as a false positive, occurs when a true null hypothesis is rejected. In other words, it is the error of concluding that there is a significant effect or difference when none exists. In a courtroom, for example, a Type I error corresponds to convicting an innocent defendant. The probability of making a Type I error equals the significance level, alpha (α), which is typically set at 0.05 (5%).
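
The statement that α is the probability of a Type I error can be checked by simulation. This sketch rests on simplifying assumptions: a two-sided one-sample z-test with known standard deviation (chosen because Python's standard library provides a normal distribution but no t distribution), data generated so that the null hypothesis is true by construction, and a hypothetical helper name. Every rejection is therefore a false positive, and the rejection rate should land close to α.

```python
import math
import random
from statistics import NormalDist, fmean


def type_i_error_rate(alpha=0.05, n=30, trials=10_000, seed=0):
    """Estimate how often a two-sided z-test rejects a true null hypothesis.

    Samples come from N(0, 1), so H0 (mean == 0) is true and every
    rejection is a Type I error.
    """
    rng = random.Random(seed)
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = fmean(sample) * math.sqrt(n)  # z statistic with known sigma = 1
        if abs(z) > critical:
            rejections += 1
    return rejections / trials


print(type_i_error_rate(alpha=0.05))
```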

On the other hand, a Type II error, or false negative, occurs when a false null hypothesis is not rejected. This is the error of failing to detect a significant effect or difference that does exist. In medical testing, for instance, a Type II error occurs when a test indicates that a person does not have a disease when they actually do. The probability of committing a Type II error is denoted by beta (β); the power of a test, its probability of correctly rejecting a false null hypothesis, equals 1 − β.

The probabilities of Type I and Type II errors can be minimised through careful study design. To reduce the likelihood of a Type I error, alpha can be made more stringent, for example 0.01 instead of 0.05. To reduce the probability of a Type II error, the sample size can be increased or alpha can be relaxed, for example to 0.1 instead of 0.05, although relaxing alpha raises the Type I error rate.
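
Both levers can be seen in a closed-form power calculation. The sketch below assumes the same simplified setting as a two-sided one-sample z-test with known standard deviation, where the effect size is the true mean shift divided by σ; the helper name and the effect size of 0.3 are illustrative. For that test, power is Φ(δ√n − z) + Φ(−δ√n − z), with z = Φ⁻¹(1 − α/2), and β = 1 − power.

```python
import math
from statistics import NormalDist


def type_ii_error(effect_size, n, alpha=0.05):
    """Return beta for a two-sided one-sample z-test with known sigma.

    effect_size is the true mean shift divided by sigma (so H0 is false
    whenever effect_size != 0).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n)
    power = std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)
    return 1 - power


for alpha in (0.01, 0.05, 0.10):
    for n in (20, 50, 100):
        print(f"alpha={alpha:.2f}  n={n:>3}  beta={type_ii_error(0.3, n, alpha):.3f}")
```

Reading down each alpha block shows β falling as n grows; comparing blocks shows β falling as alpha is relaxed.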

In the context of machine learning, Type I and Type II errors are relevant when comparing the performance of different algorithms. For example, McNemar's test, which is similar to the chi-squared test, compares two algorithms' predictions on the same test set: it builds a 2×2 contingency table of where each algorithm was right or wrong and determines whether the observed proportions differ significantly from the expected proportions. Because it requires only a single training and test run, it is useful for evaluating large deep learning neural networks that take considerable time to train.
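
A minimal sketch of McNemar's test, assuming the common form with a continuity correction: only the discordant cells of the contingency table matter, namely b (cases the first model got right and the second got wrong) and c (the reverse). The statistic (|b − c| − 1)² / (b + c) is compared against a chi-squared distribution with one degree of freedom, whose tail probability equals that of the square of a standard normal variable. The function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist


def mcnemar_test(y_true, pred_a, pred_b):
    """McNemar's test (continuity-corrected) for two classifiers on one test set.

    b = cases model A got right and model B got wrong
    c = cases model A got wrong and model B got right
    Returns (chi-squared statistic, two-sided p-value).
    """
    b = c = 0
    for truth, pa, pb in zip(y_true, pred_a, pred_b):
        a_ok, b_ok = pa == truth, pb == truth
        if a_ok and not b_ok:
            b += 1
        elif b_ok and not a_ok:
            c += 1
    if b + c == 0:
        return 0.0, 1.0  # the models never disagree: no evidence of a difference
    statistic = max(abs(b - c) - 1, 0) ** 2 / (b + c)
    # P(chi2_1 > x) = P(|Z| > sqrt(x)) for a standard normal Z.
    p_value = 2 * (1 - NormalDist().cdf(math.sqrt(statistic)))
    return statistic, p_value


y_true = [1] * 35
pred_a = [1] * 10 + [0] * 25  # A is right on the first 10 cases only
pred_b = [0] * 10 + [1] * 25  # B is right on the last 25 cases only
print(mcnemar_test(y_true, pred_a, pred_b))
```

Here b = 10 and c = 25, so the statistic is (15 − 1)² / 35 = 5.6, which is significant at α = 0.05.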

Asymptotic analysis

In computer science, asymptotic analysis is used to analyse the performance of algorithms. It helps determine their computational complexity, that is, the amount of time, storage, or other resources required to execute them. Asymptotic analysis evaluates an algorithm's efficiency by considering how its resource usage grows with the input size. This growth is often expressed in Big O notation, which gives an upper bound on an algorithm's time or space complexity.
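
For reference, the standard formal definition of Big O, followed by a short worked example using an illustrative cost function f(n) = 3n² + 5n + 2:

$$
f(n) = O\big(g(n)\big) \iff \exists\, c > 0,\ \exists\, n_0 \in \mathbb{N}\ \text{such that}\ 0 \le f(n) \le c\,g(n)\ \text{for all}\ n \ge n_0
$$

$$
3n^2 + 5n + 2 \;\le\; 3n^2 + 5n^2 + 2n^2 \;=\; 10n^2 \quad \text{for all } n \ge 1,
\qquad \text{so } 3n^2 + 5n + 2 = O(n^2) \text{ with } c = 10,\ n_0 = 1.
$$

The lower-order terms and the constant 3 disappear from the bound, which is exactly why asymptotic analysis ignores constant factors.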

However, asymptotic analysis has its limitations. It ignores constant factors and machine-specific differences, so in practice an asymptotically slower algorithm may perform better on small inputs or on particular hardware and software. Additionally, in a statistical setting, asymptotic analysis does not provide a way to evaluate the finite-sample distributions of sample statistics. Therefore, while it is a valuable tool, it should be complemented with other analysis techniques when selecting and designing algorithms.
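
The effect of ignored constant factors can be shown with two hypothetical cost models: an O(n) algorithm that performs 100·n steps and an O(n²) algorithm that performs n² steps. These step counts are assumptions chosen for illustration, not measurements of real algorithms. The quadratic one is cheaper for every n below 100; the two tie at n = 100, and the linear one only wins from n = 101 onwards.

```python
def cost_linear_big_constant(n):
    """Hypothetical O(n) algorithm with a large constant factor: 100 * n steps."""
    return 100 * n


def cost_quadratic_small_constant(n):
    """Hypothetical O(n^2) algorithm with constant factor 1: n * n steps."""
    return n * n


def crossover(limit=10_000):
    """Smallest n at which the asymptotically faster algorithm is strictly cheaper."""
    for n in range(1, limit + 1):
        if cost_linear_big_constant(n) < cost_quadratic_small_constant(n):
            return n
    return None  # no crossover found within the search limit


print(crossover())
```

If an application only ever processes inputs smaller than the crossover point, the asymptotically slower algorithm is the better practical choice.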

Frequently asked questions

Algorithm analysis is important because using an inefficient algorithm can significantly impact system performance. It can also help determine if an algorithm is worth implementing.

An algorithm's efficiency is measured by the amount of time, storage, or other resources needed to execute it. An algorithm is efficient when its resource usage is small or grows slowly as the size of the input grows.

One way to compare two algorithms is to implement both as computer programs, run them on a suitable range of inputs, and measure how much of each resource the programs use. However, this approach is often unsatisfactory because of the effort involved in programming and testing two algorithms, the potential for bias from the programmer, and the possibility that the choice of empirical test cases favours one algorithm.

McNemar's test is used to determine whether the observed proportions in the contingency table of two algorithms' predictions differ significantly from the expected proportions. For good replicability, Remco Bouckaert and Eibe Frank recommend using either 100 runs of random resampling or 10×10-fold cross-validation, combined with the Nadeau and Bengio correction to the paired Student's t-test.
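
The Nadeau and Bengio correction can be sketched as follows, under the assumption that each of k runs yields one score difference between the two algorithms and that every run uses the same training and test set sizes. Because the training sets of different runs overlap, the runs are not independent, so the correction inflates the variance term from 1/k to 1/k + n_test/n_train. The function name is hypothetical. The statistic is then compared against a t distribution with k − 1 degrees of freedom; the standard library has no t distribution, but `scipy.stats.t.sf(abs(t), k - 1) * 2` gives the two-sided p-value if SciPy is available.

```python
import math
from statistics import fmean, variance


def corrected_resampled_t(differences, n_train, n_test):
    """Nadeau and Bengio corrected paired t statistic.

    differences: per-run score differences (algorithm A minus algorithm B),
                 e.g. 100 values from 10x10-fold cross-validation.
    n_train, n_test: training and test set sizes used in each run.
    """
    k = len(differences)
    if k < 2:
        raise ValueError("need at least two runs")
    mean_diff = fmean(differences)
    var_diff = variance(differences)  # sample variance with k - 1 denominator
    if var_diff == 0:
        raise ValueError("all differences are identical; the statistic is undefined")
    corrected_se = math.sqrt((1 / k + n_test / n_train) * var_diff)
    return mean_diff / corrected_se  # compare against a t distribution with k - 1 df


print(corrected_resampled_t([0.01, 0.03, 0.02, 0.04], n_train=900, n_test=100))
```

Compared with the uncorrected paired t-test, the corrected statistic is smaller in magnitude, which counteracts the inflated Type I error caused by overlapping training sets.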