Collinearity diagnostics are used to identify multicollinearity, which occurs when two or more predictor variables are near-perfect linear combinations of one another. Multicollinearity leads to unstable regression estimates and high standard errors, which can produce misleading statistical conclusions. The variance inflation factor (VIF) is a commonly used diagnostic, with values above 10 often taken to indicate multicollinearity. The condition index and variance proportions are also considered. When interpreting a collinearity diagnostics table, it is typical to look for pairs or groups of predictors with variance proportions above 0.9, or above 0.8 or 0.7 under less strict cutoffs, which indicate a strong relationship and potential multicollinearity. The relationship does not need to be exact: a strong linear relationship among predictors is sufficient to suggest multicollinearity.

| Topic | Description |
|---|---|
| Collinearity | Two variables are near-perfect linear combinations of one another |
| Multicollinearity | More than two variables are near-perfect linear combinations of one another |
| Collinearity problem | Occurs when a component associated with a high condition index contributes strongly (variance proportion greater than about 0.5) to the variance of two or more variables |
| Multicollinearity diagnostic tools | Variance inflation factor (VIF), condition index and condition number, and variance decomposition proportion (VDP) |
| Multicollinearity detection | PROC REG provides several methods for detecting collinearity with the COLLIN, COLLINOINT, TOL, and VIF options |
| Collinearity diagnostics | Pairs in a line with variance proportion values above 0.80 or 0.70 |
| Collinearity diagnostics | If there are more than two predictors with a VIF above 10, look at the collinearity diagnostics table |
| Collinearity diagnostics | If there are lines with a Condition Index above 15, check if there is more than one predictor with values above 0.90 in the variance proportions |
| Multicollinearity in regression analysis | Centering is a way of reducing collinearity, but standardization is not recommended as it changes the coefficients |
| Multicollinearity in regression analysis | Principal component analysis or factor analysis can also generate a single variable that combines multicollinear variables |

Variance proportions above 0.90 indicate collinearity problems

Collinearity refers to two variables that are near-perfect linear combinations of one another. Multicollinearity, on the other hand, involves more than two variables. In the presence of multicollinearity, regression estimates are unstable and have high standard errors.

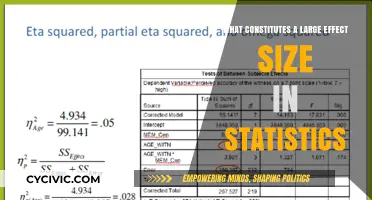

Collinearity diagnostics are used to identify multicollinearity in multiple regression models. Diagnostic tools include the variance inflation factor (VIF), condition index, condition number, and variance decomposition proportion (VDP). According to Hair et al. (2013), for each row with a high Condition Index, you search for values above 0.90 in the Variance Proportions. If you find two or more values above 0.90 in one line, you can assume that there is a collinearity problem between those predictors.

However, if only one predictor in a line has a value above 0.90, this is not indicative of multicollinearity. In such cases, it is recommended to consider pairs of predictors (or groups of predictors) with values above 0.80 or 0.70. Additionally, if there are more than two predictors with a VIF above 10, it is necessary to examine the collinearity diagnostics table to identify the lines with a Condition Index above 15.
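The row-by-row check described above can be sketched in Python. This is a minimal illustration, assuming NumPy is available and assuming the common construction in which the intercept is included and every column is scaled to unit length before a singular value decomposition. The function names `collinearity_diagnostics` and `flag_rows` and the simulated data are hypothetical. The default cutoffs (15 and 0.90) are simply the thresholds quoted in the text.

```python
import numpy as np

def collinearity_diagnostics(X, add_intercept=True):
    """Return (condition indices, variance-proportion table).

    Rows of the table are components, columns are variables.
    """
    X = np.asarray(X, dtype=float)
    if add_intercept:
        X = np.column_stack([np.ones(len(X)), X])
    # Scale every column to unit length so the units of measurement do not matter.
    Xs = X / np.linalg.norm(X, axis=0)
    _, d, Vt = np.linalg.svd(Xs, full_matrices=False)
    cond_index = d.max() / d                 # one condition index per component
    phi = (Vt.T ** 2) / d ** 2               # phi[j, k] = v_jk^2 / d_k^2
    props = (phi / phi.sum(axis=1, keepdims=True)).T
    return cond_index, props

def flag_rows(cond_index, props, ci_cutoff=15.0, vp_cutoff=0.90):
    """List components with a high condition index and two or more
    variables whose variance proportion exceeds the cutoff."""
    flagged = []
    for k, ci in enumerate(cond_index):
        cols = np.flatnonzero(props[k] > vp_cutoff)
        if ci > ci_cutoff and len(cols) >= 2:
            flagged.append((k, float(ci), cols.tolist()))
    return flagged

# Simulated data (an assumption for illustration): x2 is almost a copy of x1.
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + 0.01 * rng.normal(size=200)
x3 = rng.normal(size=200)

cond_index, props = collinearity_diagnostics(np.column_stack([x1, x2, x3]))
flagged = flag_rows(cond_index, props)
print(flagged)  # column 0 is the intercept, columns 1-3 are x1, x2, x3
```

Lowering `vp_cutoff` to 0.80 or 0.70 gives the fallback search for pairs or groups of predictors when no line contains two values above 0.90.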

It is important to note that collinearity or multicollinearity does not need to be exact to be present. A strong relationship is sufficient to indicate significant collinearity or multicollinearity. The coefficient of determination (R2) is the proportion of variance in a response variable predicted by a regression model built on the explanatory variable(s). Applied to a regression of one predictor on the remaining predictors, R2 measures multicollinearity: R2 = 0 indicates that the predictor has no linear relationship with the others, and R2 = 1 indicates exact multicollinearity.

Tolerance and variance inflation factor (VIF)

Tolerance

Tolerance measures the extent to which an independent variable in a regression model is linearly independent of the other independent variables. It is the proportion of variance in a predictor that is not explained by the other predictors in the model, that is, 1 - R2 from regressing that predictor on the others. A low tolerance value indicates high multicollinearity, while a tolerance close to 1 indicates that the predictor carries information largely distinct from the other predictors.

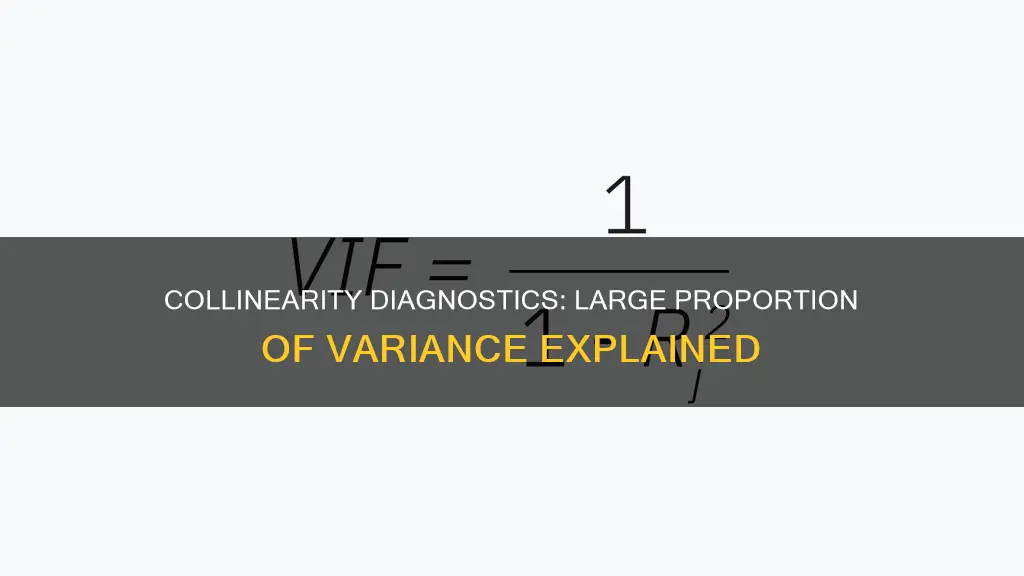

Variance Inflation Factor (VIF)

The VIF is the reciprocal of tolerance. It is the factor by which the variance of an estimated regression coefficient is inflated because of multicollinearity among the predictor variables, relative to the case where the predictor is uncorrelated with the others. A high VIF indicates a higher degree of multicollinearity, while a low VIF suggests that the predictor variable is relatively independent of the others.
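As a rough sketch of how tolerance and VIF relate, the snippet below regresses each predictor on the remaining ones and converts the resulting R2 into a tolerance (1 - R2) and a VIF (1 / tolerance). It assumes NumPy is available. The function name `tolerance_and_vif` and the simulated data are hypothetical and only serve the illustration.

```python
import numpy as np

def tolerance_and_vif(X):
    """Tolerance and VIF for each column of X via auxiliary regressions."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    tol = np.empty(p)
    for j in range(p):
        y = X[:, j]
        # Regress predictor j on an intercept plus all other predictors.
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ beta
        r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        tol[j] = 1.0 - r2          # share of variance NOT explained by the others
    return tol, 1.0 / tol          # VIF is the reciprocal of tolerance

# Simulated predictors (an assumption): x2 is correlated with x1, x3 is not.
rng = np.random.default_rng(1)
x1 = rng.normal(size=500)
x2 = x1 + 0.5 * rng.normal(size=500)
x3 = rng.normal(size=500)
X = np.column_stack([x1, x2, x3])

tol, vif = tolerance_and_vif(X)
print(np.round(tol, 3), np.round(vif, 3))
```

The same VIF values appear on the diagonal of the inverse of the predictors' correlation matrix, which is a convenient cross-check.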

Interpreting VIF values:

- A VIF of 1 indicates no correlation with other variables.

- Values between 1 and 5 indicate moderate correlation.

- Values above 5 indicate high correlation, with values above 10 being a significant concern.

- Some authors suggest a more conservative threshold of 2.5 as a cause for concern.

High VIF values can lead to larger confidence intervals and smaller chances of a coefficient being statistically significant. In such cases, obtaining more data or using techniques like Shapley regression can help mitigate the issues caused by multicollinearity.

In summary, tolerance and VIF are valuable tools for diagnosing multicollinearity in regression analysis. They help identify the presence of high intercorrelation between independent variables, which can impact the reliability and stability of the regression results.

Generalized variance inflation factor (GVIF)

The generalized variance inflation factor (GVIF) is a measure used to assess collinearity in regression models. Collinearity occurs when two or more variables are highly correlated, leading to instability in regression estimates and high standard errors. It is a concern when conducting regression analysis as it can result in misleading or incorrect results.

The GVIF is typically applied to factors and polynomial variables, which require more than one coefficient and therefore more than one degree of freedom. It is calculated for sets of related regressors, such as the set of dummy regressors for a factor. Because the GVIF tends to grow with the number of coefficients in the term, it is usually reported in the adjusted form GVIF^[1/(2*df)], where df is the number of degrees of freedom associated with the term. For a term with a single coefficient (df = 1), the GVIF equals the usual VIF, so the adjusted value is the square root of the VIF. This adjustment makes the GVIF comparable across dimensions and reduces it to a linear measure.
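The calculation for a set of related regressors can be sketched as follows. The snippet assumes the determinant-based definition, in which the GVIF of a term is det(R11) * det(R22) / det(R): R is the correlation matrix of all regressors, R11 the block for the term's own columns, and R22 the block for the remaining columns. It then reports the adjusted value GVIF^(1/(2*df)). It assumes NumPy and at least one regressor outside the term. The function name `gvif` and the simulated three-level factor are hypothetical.

```python
import numpy as np

def gvif(X, term_cols):
    """GVIF and GVIF**(1/(2*df)) for the columns in term_cols.

    Assumes the determinant-based definition described in the lead-in.
    """
    X = np.asarray(X, dtype=float)
    R = np.corrcoef(X, rowvar=False)
    term = np.asarray(term_cols)
    rest = np.setdiff1d(np.arange(X.shape[1]), term)
    det = np.linalg.det
    g = det(R[np.ix_(term, term)]) * det(R[np.ix_(rest, rest)]) / det(R)
    df = len(term)                       # one degree of freedom per column
    return g, g ** (1.0 / (2 * df))

# Simulated design (an assumption): a 3-level factor coded as two dummies,
# plus a numeric predictor whose mean depends on the factor level.
rng = np.random.default_rng(2)
group = rng.integers(0, 3, size=300)
d1 = (group == 1).astype(float)
d2 = (group == 2).astype(float)
x = group + rng.normal(size=300)
X = np.column_stack([d1, d2, x])

g_factor, adj_factor = gvif(X, [0, 1])   # the two dummies as one term (df = 2)
g_x, adj_x = gvif(X, [2])                # single-coefficient term (df = 1)
print(g_factor, adj_factor, g_x, adj_x)
```

For the single-column term, `g_x` coincides with the ordinary VIF of that column and `adj_x` is its square root.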

The interpretation of GVIF values helps identify potential collinearity issues. High GVIF values indicate a higher degree of collinearity. While there are no strict thresholds, values above 10 are often considered indicative of potential collinearity problems. This cutoff is on the VIF scale: because the adjusted value GVIF^[1/(2*df)] is comparable to the square root of a VIF, it should be squared before being compared with a VIF threshold such as 10. It is also important to interpret GVIF values relative to other diagnostic tools, such as tolerance, condition index, and variance proportions.

The GVIF can be computed with functions in statistical software or programming languages, such as the `vif` function in the car package in R. By applying these tools, researchers can identify collinearity and take appropriate actions, such as removing or transforming variables to improve the stability and accuracy of their regression models.

In summary, the GVIF is a valuable tool for diagnosing collinearity in regression models, particularly when dealing with factors and polynomial variables. By considering GVIF values and interpreting them alongside other diagnostic measures, researchers can make informed decisions to address collinearity and improve the reliability of their statistical analyses.

Multicollinearity and misleading statistical results

Multicollinearity arises in multiple regression models when there is a high degree of linear intercorrelation between two or more explanatory variables. In simpler terms, multicollinearity occurs when two or more variables in the same regression model are too closely related. This can lead to unstable regression estimates with high standard errors and, ultimately, to incorrect results in regression analyses.

Collinearity diagnostics are used to identify multicollinearity. One diagnostic tool is the variance inflation factor (VIF), which measures the inflation in the variances of the parameter estimates due to collinearities among the predictors. A high VIF indicates the presence of multicollinearity. Another tool is the condition index, which is used in conjunction with variance proportions to identify multicollinearity. If two or more variables have high variance proportions (above 0.5 or 0.7) and correspond to high condition indices, this indicates a collinearity problem.

The presence of multicollinearity does not necessarily imply that one of the collinear variables needs to be removed from the regression model. Instead, the multicollinear variables can be combined into a single variable using principal component analysis or factor analysis. This increases the stability of the model, although the model then estimates the effect of the combined variable rather than a separate effect for each of the original variables.
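One way to picture the combining step is to replace a group of collinear predictors with their first principal component. The snippet below is a minimal sketch under that assumption, using NumPy only. The function name `first_principal_component` and the simulated data are hypothetical, and factor analysis would be a different procedure.

```python
import numpy as np

def first_principal_component(X):
    """Scores on the first principal component of standardized columns,
    plus the share of total variance that component explains."""
    X = np.asarray(X, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)   # standardize each column
    _, s, Vt = np.linalg.svd(Z, full_matrices=False)
    scores = Z @ Vt[0]                                 # one combined variable
    explained = s[0] ** 2 / np.sum(s ** 2)
    return scores, explained

# Simulated collinear pair (an assumption for illustration).
rng = np.random.default_rng(3)
x1 = rng.normal(size=300)
x2 = x1 + 0.2 * rng.normal(size=300)

scores, explained = first_principal_component(np.column_stack([x1, x2]))
print(round(float(explained), 3))
```

The `scores` column can then enter the regression in place of `x1` and `x2`. Its sign is arbitrary, so the direction of the resulting coefficient should be read with that in mind.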

It is important to note that collinearity or multicollinearity does not need to be exact for its presence to be determined. A strong relationship between variables may be sufficient to indicate significant multicollinearity. Therefore, it is crucial to perform collinearity diagnostics and take appropriate action to address multicollinearity when building regression models to avoid misleading statistical results.

In summary, multicollinearity can lead to misleading statistical results by causing unstable regression estimates and high standard errors. Collinearity diagnostics, such as VIF and condition index analysis, can help identify multicollinearity. By taking appropriate actions, such as combining multicollinear variables or assessing their individual effects, researchers can build more robust regression models and obtain more accurate results.

PROC REG and collinearity diagnostics

Collinearity diagnostics are used to determine the presence and strength of collinearity or multicollinearity between variables. Collinearity involves two variables that are near-perfect linear combinations of one another, while multicollinearity involves more than two variables.

PROC REG is a procedure in SAS used for regression analysis, and it includes the COLLIN option for collinearity diagnostics. The COLLIN option in PROC REG includes the intercept term among the variables to be analyzed for collinearity. The COLLINOINT option, on the other hand, excludes the intercept term and centers the data by subtracting the mean of each column in the data matrix.
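The difference between analysing collinearity with the intercept included and with the intercept adjusted out can be illustrated outside SAS. The Python sketch below is not PROC REG output and does not claim to reproduce it exactly. It assumes NumPy, unit-length column scaling, and a hypothetical helper `condition_indices` whose `intercept` argument mimics the two behaviours described above.

```python
import numpy as np

def condition_indices(X, intercept="include"):
    """Condition indices with the intercept included as a column
    ("include") or removed by centering each column ("adjust_out")."""
    X = np.asarray(X, dtype=float)
    if intercept == "include":
        X = np.column_stack([np.ones(len(X)), X])
    elif intercept == "adjust_out":
        X = X - X.mean(axis=0)        # subtract each column's mean
    else:
        raise ValueError("intercept must be 'include' or 'adjust_out'")
    Xs = X / np.linalg.norm(X, axis=0)              # unit-length columns
    d = np.linalg.svd(Xs, compute_uv=False)
    return d.max() / d

# Simulated data (an assumption): x1 has a large mean and a small spread,
# so it is nearly proportional to the constant column.
rng = np.random.default_rng(4)
x1 = 100.0 + rng.normal(size=200)
x2 = rng.normal(size=200)
X = np.column_stack([x1, x2])

ci_included = condition_indices(X, intercept="include")
ci_adjusted = condition_indices(X, intercept="adjust_out")
print(ci_included.max(), ci_adjusted.max())
```

In this example the large condition index in the first analysis reflects collinearity between `x1` and the intercept, which disappears once the columns are centered.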

When interpreting collinearity diagnostics, several measures and thresholds are considered. The variance inflation factor (VIF) is a commonly used diagnostic tool, with a VIF of 1 indicating no inflation and a VIF exceeding 10 indicating serious multicollinearity that requires correction. Additionally, the condition index is examined, with values above 15 warranting further investigation. In the collinearity diagnostics table, pairs of predictors with variance proportion values above 0.8 or 0.7 may indicate collinearity.

It is important to note that collinearity or multicollinearity does not need to be exact to be present. A strong relationship between variables may be sufficient to indicate significant collinearity. The strength of that relationship can be quantified with the coefficient of determination, which represents the proportion of variance in a response variable predicted by a regression model built on the explanatory variables; for collinearity, one predictor is treated as the response and regressed on the others.

To address collinearity, techniques such as dimensionality reduction, principal component regression, and biased estimation methods like ridge regression can be employed. Additionally, variable selection techniques can be used to omit variables from the model, although different methods may select different variables in cases of near collinearity.
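As an example of a biased estimation method, ridge regression adds a penalty to the least-squares problem, which shrinks the coefficients and stabilizes them when predictors are nearly collinear. The snippet below is a minimal closed-form sketch assuming NumPy, centered data, and an unpenalized intercept. The function name `ridge_coefficients`, the penalty value, and the simulated data are hypothetical choices for illustration.

```python
import numpy as np

def ridge_coefficients(X, y, alpha):
    """Slope estimates minimizing ||y - Xb||^2 + alpha * ||b||^2 on centered data.

    alpha = 0 gives ordinary least squares.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xc = X - X.mean(axis=0)            # centering removes the intercept
    yc = y - y.mean()
    p = X.shape[1]
    return np.linalg.solve(Xc.T @ Xc + alpha * np.eye(p), Xc.T @ yc)

# Simulated near-collinear predictors (an assumption for illustration).
rng = np.random.default_rng(5)
x1 = rng.normal(size=200)
x2 = x1 + 0.05 * rng.normal(size=200)
X = np.column_stack([x1, x2])
y = x1 + x2 + rng.normal(size=200)

b_ols = ridge_coefficients(X, y, alpha=0.0)
b_ridge = ridge_coefficients(X, y, alpha=10.0)
print(b_ols, b_ridge)
```

In practice the penalty `alpha` is usually chosen by cross-validation, and predictors are often standardized first so that the penalty treats them on a common scale.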

Frequently asked questions

Collinearity occurs when two variables are near-perfect linear combinations of one another.

Multicollinearity involves more than two variables and their near-perfect linear combinations.

Multicollinearity leads to unstable regression estimates and high standard errors.

Diagnostic tools include the variance inflation factor (VIF), condition index, condition number, and variance decomposition proportion (VDP).

In the collinearity diagnostics table, variance proportions above 0.5 are generally considered large, with stricter cutoffs of 0.7 or 0.8 used in some cases.