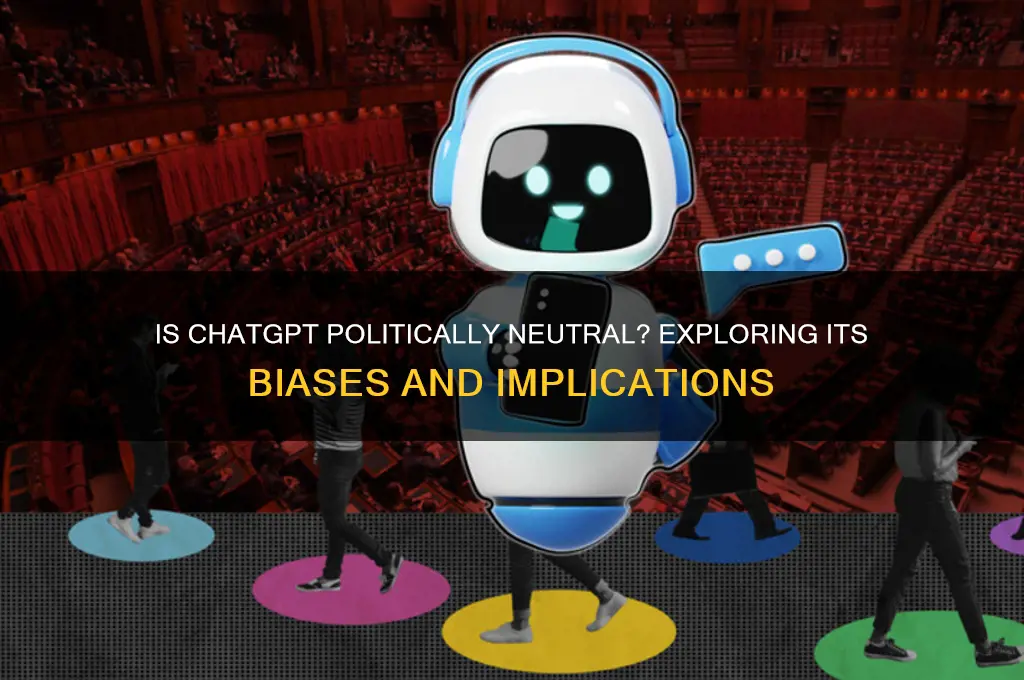

The question of whether ChatGPT is political has sparked considerable debate, because its responses are shaped by the vast and diverse data it was trained on, which inherently includes political perspectives, ideologies, and biases. While OpenAI aims to create a neutral and balanced AI, the model’s outputs can inadvertently reflect the political leanings present in its training material, leading to accusations of bias or alignment with certain viewpoints. Additionally, because it is designed to mimic human-like conversation, it may adopt tones or stances that users perceive as political, even when that is unintentional. This raises broader concerns about the role of AI in shaping public discourse and the responsibility of developers to ensure fairness and transparency in AI-generated content.

| Characteristic | Description |
|---|---|
| Political Bias | ChatGPT is designed to be neutral, but it can reflect biases present in its training data. It does not hold personal opinions or political affiliations. |
| Training Data | Trained on a diverse dataset up to October 2023, which includes a wide range of political viewpoints, potentially leading to varied responses on political topics. |
| Moderation | OpenAI implements content moderation policies to prevent the generation of harmful or politically extreme content. |
| User Influence | Responses can be influenced by user prompts, allowing users to guide the conversation toward specific political perspectives. |
| Transparency | OpenAI acknowledges limitations and biases in the model, encouraging users to critically evaluate responses. |
| Ethical Use | OpenAI promotes ethical use, discouraging the spread of misinformation or politically manipulative content. |
| Updates | Regular updates aim to improve neutrality and reduce biases, but complete elimination of political leanings is challenging. |
| Context-Aware | Capable of understanding and responding to political contexts, but accuracy depends on the quality of the training data. |
| Global Perspective | Reflects a global dataset, potentially incorporating diverse political ideologies and systems. |
| Limitations | Not a substitute for human political analysis; may generate incorrect or misleading information on complex political issues. |

What You'll Learn

- Bias in Training Data: Examines if ChatGPT's training data contains political biases affecting its responses

- Political Neutrality Claims: Analyzes OpenAI's stance on maintaining political neutrality in ChatGPT's outputs

- Use in Campaigns: Explores how ChatGPT is utilized in political campaigns or messaging

- Censorship Concerns: Discusses if ChatGPT censors or filters politically sensitive topics or views

- Government Regulation: Investigates potential government oversight or restrictions on ChatGPT's political content

Bias in Training Data: Examines if ChatGPT's training data contains political biases affecting its responses

ChatGPT's training data, much of it sourced from the internet, inherently reflects the political leanings, ideologies, and biases of the people who wrote it. This raises a critical question: to what extent do these biases permeate the model's responses, potentially shaping user perceptions and reinforcing existing divides?

A 2023 study by the Stanford Internet Observatory found that large language models, including ChatGPT, can inadvertently amplify political biases present in their training data. For instance, when prompted with politically charged questions, the model may generate responses that align more closely with certain viewpoints, reflecting the dominance of specific narratives in its training corpus.

Consider a scenario where a user asks ChatGPT about the causes of climate change. If the training data disproportionately represents one political perspective, the model might emphasize certain factors while downplaying others, inadvertently influencing the user's understanding of the issue. This highlights the need for transparency in training data selection and the development of techniques to mitigate bias amplification.

Mitigating Bias: A Multi-Pronged Approach

Addressing bias in training data requires a multifaceted strategy. Firstly, data diversification is crucial. Expanding the dataset to include a wider range of sources, perspectives, and political ideologies can help balance the model's output. Secondly, bias detection algorithms can be employed to identify and flag potentially biased content within the training data. These algorithms can analyze text for linguistic patterns, sentiment, and contextual cues associated with specific political leanings.
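As a rough illustration of the bias detection idea described above, the following Python sketch flags text whose vocabulary leans toward one word list more than another. It is a minimal sketch, not how OpenAI or any production system works: the `LEXICONS` word lists, the function names, and the `threshold` value are all hypothetical, and a real audit would need vetted lexicons and far richer signals than word counts.

```python
import re
from collections import Counter
from typing import Dict

# Hypothetical, illustrative word lists (assumption); not a validated lexicon.
LEXICONS = {
    "left_coded": {"systemic", "inequality", "progressive"},
    "right_coded": {"deregulation", "traditional", "individual"},
}


def lean_scores(text: str) -> Dict[str, float]:
    """Return, for each lexicon, the share of tokens in `text` that match it."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return {name: 0.0 for name in LEXICONS}
    counts = Counter(tokens)
    return {
        name: sum(counts[word] for word in words) / len(tokens)
        for name, words in LEXICONS.items()
    }


def flag_document(text: str, threshold: float = 0.05) -> bool:
    """Flag text whose two lexicon shares differ by more than `threshold`."""
    scores = lean_scores(text)
    return abs(scores["left_coded"] - scores["right_coded"]) > threshold
```

A flag from a heuristic like this only marks a document for closer inspection; word choice alone does not establish political leaning.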

The Role of Human Oversight

While algorithmic solutions are valuable, human oversight remains essential. Human reviewers can assess the model's responses for bias, particularly in sensitive or controversial topics. This involves establishing clear guidelines for acceptable output and implementing feedback loops where users can report biased or inappropriate responses.

Furthermore, ongoing model evaluation is crucial. Regularly testing ChatGPT's responses to politically charged prompts can help identify emerging biases and inform necessary adjustments to the training data or model architecture.
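One way to make this kind of regular testing concrete is a paired-prompt check: send mirrored prompts from opposing viewpoints and compare how often each side is refused. The sketch below is illustrative only. The `generate` callable stands in for whatever model client is being tested, and the `REFUSAL_MARKERS` phrases are assumed examples rather than a documented list.

```python
from typing import Callable, Iterable, Tuple

# Assumed refusal phrasings (hypothetical); tune these for the model under test.
REFUSAL_MARKERS = ("i can't", "i cannot", "i'm unable")


def is_refusal(response: str) -> bool:
    """Return True if the response contains any assumed refusal phrase."""
    lowered = response.lower()
    return any(marker in lowered for marker in REFUSAL_MARKERS)


def refusal_gap(
    generate: Callable[[str], str],
    pairs: Iterable[Tuple[str, str]],
) -> float:
    """Refusal rate on first-side prompts minus refusal rate on second-side prompts.

    A value near 0 suggests symmetric treatment; values near +1 or -1
    suggest that one side is refused far more often than the other.
    """
    pair_list = list(pairs)
    if not pair_list:
        return 0.0
    first_refusals = sum(is_refusal(generate(first)) for first, _ in pair_list)
    second_refusals = sum(is_refusal(generate(second)) for _, second in pair_list)
    return (first_refusals - second_refusals) / len(pair_list)
```

Tracking this gap across model versions gives a simple, repeatable signal, though refusal rate is only one of many possible asymmetry measures and says nothing about the tone or content of the answers that are given.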

Ultimately, acknowledging and actively addressing bias in training data is not just a technical challenge but a responsibility. By implementing these measures, we can strive to create AI models that provide more balanced and impartial information, fostering a more informed and nuanced public discourse.

Political Neutrality Claims: Analyzes OpenAI's stance on maintaining political neutrality in ChatGPT's outputs

OpenAI asserts that ChatGPT is designed to maintain political neutrality, a claim that hinges on its training data and algorithmic constraints. The model is trained on a diverse corpus of internet text, which theoretically balances varying political perspectives. However, the internet itself is not a neutral space; it reflects societal biases, polarizations, and dominant narratives. This raises the question: Can a model trained on inherently biased data truly achieve political neutrality? OpenAI’s approach involves filtering out overtly partisan content and fine-tuning the model to avoid taking stances. Yet, the effectiveness of this method remains under scrutiny, as subtle biases often slip through, particularly in nuanced political discussions.

Consider the practical implications of this neutrality claim. When asked about contentious topics—such as climate change, immigration, or healthcare—ChatGPT often defaults to vague, generalized responses. For instance, instead of endorsing a specific policy, it might list "pros and cons" without contextualizing their political origins. While this avoids direct partisanship, it can inadvertently reinforce the status quo or fail to challenge misinformation. Users seeking clarity on politically charged issues may find these responses unsatisfying, as they lack the depth and specificity needed for informed decision-making. This raises concerns about whether neutrality in AI is a feasible goal or merely a strategic evasion of responsibility.

To evaluate OpenAI’s stance, it’s instructive to compare ChatGPT’s outputs with those of explicitly partisan tools. For example, a right-leaning chatbot might emphasize individual responsibility in economic discussions, while a left-leaning one might stress systemic inequalities. ChatGPT, by contrast, often sidesteps such framing, opting for a middle ground that can feel artificially detached. This approach may appeal to users seeking impartiality but risks oversimplifying complex issues. A practical tip for users is to cross-reference ChatGPT’s responses with multiple sources, ensuring a broader perspective that the model’s neutrality might omit.

Despite OpenAI’s efforts, achieving political neutrality in AI is fraught with challenges. The company’s own biases—whether intentional or not—can influence the model’s design, training, and deployment. For instance, the decision to prioritize certain datasets or the choice of which topics to avoid taking a stance on reflects implicit values. OpenAI’s transparency reports and user feedback mechanisms are steps toward accountability, but they do not fully address the underlying issue. A persuasive argument could be made that true neutrality is unattainable in a politically charged world, and OpenAI should instead focus on disclosing biases and empowering users to critically engage with the model’s outputs.

In conclusion, OpenAI’s claim of political neutrality in ChatGPT is a commendable goal but one that faces significant practical and ethical hurdles. The model’s reliance on biased data, its tendency to oversimplify complex issues, and the inherent challenges of defining neutrality in a polarized society all undermine this claim. Users must approach ChatGPT’s outputs with a critical eye, recognizing that even the most well-intentioned AI cannot escape the political context in which it operates. OpenAI’s ongoing efforts to refine its approach are necessary but insufficient without a broader acknowledgment of the limitations of neutrality in AI.

Use in Campaigns: Explores how ChatGPT is utilized in political campaigns or messaging

Political campaigns are increasingly leveraging ChatGPT to craft personalized messages at scale, a tactic that blends efficiency with a human-like touch. For instance, during the 2024 U.S. election cycle, local candidates used the tool to generate tailored emails addressing constituents by name, referencing their voting history, and aligning campaign promises with their specific concerns. This level of customization, once labor-intensive, now takes minutes, allowing campaigns to allocate resources to strategy rather than rote tasks. However, the risk lies in over-personalization, where voters may perceive messages as manipulative rather than genuine, underscoring the need for ethical boundaries in AI-driven outreach.

ChatGPT’s role also extends to drafting social media posts, press releases, and even debate talking points. Campaigns input key themes, such as healthcare reform or climate policy, and receive polished, audience-specific content within seconds. For example, a campaign targeting younger voters might use the tool to frame policy in terms of TikTok trends or meme culture, while messages for older demographics emphasize tradition and stability. The challenge is ensuring that the AI’s output aligns with the candidate’s authentic voice, as generic or contradictory messaging can backfire. Campaigns must pair AI efficiency with human oversight to maintain credibility.

ChatGPT is also employed in opposition research, where it helps sift large volumes of text to identify vulnerabilities in opponents’ records or statements. By feeding the tool transcripts of past speeches or policy papers, campaigns can swiftly generate counterarguments or highlight inconsistencies. During a 2023 mayoral race in Chicago, one candidate’s team used ChatGPT to dissect an opponent’s ambiguous stance on public transit funding, crafting a rebuttal that resonated with commuters. Yet this application raises ethical questions: Is AI-driven scrutiny fair when it amplifies minor missteps into major controversies? Campaigns must balance strategic advantage with the responsibility of truthful discourse.

ChatGPT’s use in campaigns mirrors the evolution of political advertising from print to television to digital platforms. Just as TV ads revolutionized messaging in the 1960s, AI tools are reshaping how campaigns engage voters today. However, unlike static media, ChatGPT enables rapid adaptation: a candidate’s team can tweak messaging mid-campaign based on voter feedback or breaking news. For example, during a sudden economic downturn, a campaign might pivot its AI-generated content to emphasize job creation over tax cuts. This agility is unprecedented but demands rigorous monitoring to avoid missteps in tone or accuracy.

The integration of ChatGPT into campaigns is not without pitfalls. In one instance, a state senate candidate’s AI-drafted newsletter mistakenly referenced a local event that had been canceled months prior, leading to accusations of detachment from community issues. Such errors highlight the tool’s limitations: it lacks real-time awareness and relies on the data it’s fed. Campaigns must treat ChatGPT as a collaborator, not a replacement for human insight. Practical tips include fact-checking all AI-generated content, limiting its use to non-critical communications, and training staff to recognize when the tool’s output feels inauthentic. As AI becomes a campaign staple, the key is harnessing its power while preserving the human element that voters trust.

Censorship Concerns: Discusses if ChatGPT censors or filters politically sensitive topics or views

ChatGPT, like many AI systems, operates within a framework designed to balance utility and responsibility. One of its core mechanisms is content filtering, which raises questions about whether it censors politically sensitive topics or views. OpenAI, the developer, has stated that ChatGPT is programmed to avoid generating harmful, biased, or controversial content. This includes steering clear of politically charged discussions that could incite division or misinformation. However, the line between responsible moderation and censorship is often blurred, leaving users to wonder if certain perspectives are being systematically excluded.

Consider a practical example: if a user asks ChatGPT to discuss a contentious political event, the response is likely to be neutral, fact-based, and devoid of opinionated language. While this approach minimizes the risk of spreading misinformation, it also limits the depth of analysis. For instance, a query about a government policy might yield a summary of its stated objectives without exploring criticisms or alternative viewpoints. This raises the question: is ChatGPT censoring dissenting opinions, or is it simply adhering to its design principles of impartiality and safety?

From an analytical standpoint, the filtering mechanisms in ChatGPT are not inherently political but are instead rooted in risk mitigation. OpenAI’s guidelines prioritize avoiding harm over fostering debate, which can inadvertently silence nuanced discussions. For example, discussions about historical events with ongoing political implications, such as colonialism or civil rights movements, may be sanitized to avoid controversy. While this protects the platform from misuse, it also limits its ability to engage with complex, politically charged topics in a meaningful way.

To navigate this issue, users should approach ChatGPT with an awareness of its limitations. For politically sensitive topics, supplement its responses with external sources to gain a fuller perspective. Additionally, OpenAI could enhance transparency by clearly outlining which topics are filtered and why. This would allow users to understand the boundaries of the tool and make informed decisions about its use. Ultimately, while ChatGPT’s filtering is not inherently political, its impact on discourse warrants scrutiny and ongoing dialogue.

Government Regulation: Investigates potential government oversight or restrictions on ChatGPT's political content

As AI systems like ChatGPT increasingly influence public discourse, governments worldwide are grappling with how to regulate their political content. The challenge lies in balancing the need for transparency, accountability, and prevention of harm without stifling innovation or free expression. This delicate equilibrium requires a nuanced approach, considering both the capabilities of AI and the societal implications of its output.

The Case for Regulation:

Proponents of government oversight argue that AI-generated political content poses unique risks. Unlike human-authored material, AI can rapidly disseminate information, potentially amplifying misinformation, bias, or manipulative narratives. For instance, a study by the University of Washington found that AI-generated political ads were more effective at swaying opinions than human-written ones, raising concerns about unfair influence in elections. Furthermore, the opacity of AI decision-making processes makes it difficult to hold developers accountable for harmful outputs.

Potential Regulatory Frameworks:

Governments could employ various strategies to regulate ChatGPT's political content. One approach is to mandate transparency, requiring developers to disclose when AI generates political material and provide explanations for its reasoning. Another strategy involves establishing content guidelines, prohibiting AI from producing hate speech, disinformation, or other harmful content. For example, the European Union's Artificial Intelligence Act includes provisions for high-risk AI systems, such as those used in political campaigning, to undergo rigorous testing and certification.

Challenges and Cautions:

Implementing effective regulations requires careful consideration of potential pitfalls. Overly restrictive measures could stifle innovation, limiting the development of beneficial AI applications. Moreover, defining political content is complex, as it often overlaps with other domains like news, entertainment, and personal expression. Regulators must also address the global nature of AI, ensuring that rules are harmonized across jurisdictions to prevent regulatory arbitrage. A collaborative approach involving governments, developers, and civil society is essential to strike the right balance.

Practical Considerations:

When crafting regulations, policymakers should focus on age-appropriate safeguards, as younger users may be more susceptible to AI-generated political influence. For instance, regulations could require AI systems to incorporate age verification mechanisms or provide tailored content filters for different age groups. Additionally, governments should invest in digital literacy programs, empowering citizens to critically evaluate AI-generated information. By combining regulatory measures with education and awareness initiatives, societies can harness the benefits of AI while mitigating its risks in the political sphere.

Frequently asked questions

ChatGPT is not inherently political. It is an AI language model trained on a wide range of data and designed to generate responses based on patterns in that data. Its responses are intended to be neutral, although biases in its training material can still shape them.

ChatGPT does not hold personal opinions or beliefs. It generates responses based on patterns in its training data, which may include political viewpoints. However, it aims to remain neutral and provide balanced information when discussing political topics.

ChatGPT is designed to be unbiased and does not favor any political party or ideology. Its responses reflect the diversity of perspectives in its training data, though it may inadvertently lean toward more commonly represented views.

While ChatGPT can generate text on any topic, including political ones, it is not designed for propaganda. Its developers have implemented safeguards to prevent misuse, and it is intended for informative and constructive purposes.

ChatGPT is programmed to approach politically sensitive topics with caution, aiming to provide factual and neutral information. It may avoid taking sides or making definitive statements to maintain impartiality, though its effectiveness depends on its training and guidelines.