Political parties increasingly leverage social engineering as a strategic tool to shape public opinion, manipulate voter behavior, and consolidate power. By employing psychological tactics, data-driven algorithms, and targeted messaging, parties exploit societal divisions, amplify emotional triggers, and create echo chambers to influence perceptions. Through the dissemination of misinformation, the curation of personalized narratives, and the manipulation of online platforms, they engineer consent and foster polarization, often at the expense of factual accuracy and democratic integrity. This practice not only undermines informed decision-making but also raises ethical concerns about the erosion of trust in institutions and the manipulation of vulnerable populations for political gain. Understanding these mechanisms is crucial to safeguarding democratic processes and fostering a more transparent and accountable political landscape.

What You'll Learn

- Manipulating Public Opinion: Using targeted messaging and misinformation to shape voter beliefs and attitudes

- Polarizing Society: Exploiting divisions to create loyal bases and weaken opposition support

- Algorithmic Targeting: Leveraging data analytics to micro-target voters with personalized political ads

- Fearmongering Tactics: Amplifying threats to rally support and justify extreme policies

- Astroturfing Campaigns: Creating fake grassroots movements to appear widely supported

Manipulating Public Opinion: Using targeted messaging and misinformation to shape voter beliefs and attitudes

Political parties have long understood the power of messaging to sway public opinion, but the digital age has supercharged their ability to manipulate voter beliefs with surgical precision. Through micro-targeting, parties can now deliver tailored messages to specific demographics, exploiting psychological vulnerabilities and reinforcing existing biases. For instance, the Cambridge Analytica scandal, which broke in 2018, revealed how data harvested from Facebook profiles had been used during the 2016 U.S. presidential election to craft personalized ads designed to inflame fears or stoke divisions among voters. This strategy doesn’t just inform; it manipulates, often bypassing rational thought by appealing directly to emotions like fear, anger, or hope.

Misinformation compounds this effect, acting as a weapon to distort reality and shape narratives. False or misleading claims, when repeated consistently, can harden into perceived truths, especially when they align with a voter’s preexisting worldview. For example, studies show that repeated exposure to misinformation about election fraud can erode trust in democratic institutions, even among those who initially doubted such claims. Political operatives exploit this cognitive phenomenon, often called the illusory truth effect, by flooding social media platforms with unverified or fabricated content, often disguised as legitimate news. The result? A fragmented public, increasingly polarized and less capable of discerning fact from fiction.

To combat this manipulation, voters must adopt a critical mindset when consuming political content. Start by verifying the source of information—is it a reputable news outlet, or a partisan blog? Cross-reference claims with multiple sources, and be wary of emotionally charged language designed to provoke an immediate reaction. Tools like fact-checking websites (e.g., Snopes, PolitiFact) can help debunk falsehoods, but their effectiveness depends on users actively seeking them out. Additionally, limiting exposure to echo chambers by diversifying media consumption can reduce the impact of targeted messaging.

A comparative analysis of successful counter-campaigns offers further insights. In Taiwan, the government launched a civic education program to teach digital literacy, empowering citizens to identify and resist disinformation. Similarly, during the 2020 U.S. elections, nonpartisan groups ran campaigns encouraging voters to “pause before sharing” unverified content. These initiatives demonstrate that while technology enables manipulation, it can also be harnessed to foster resilience. The takeaway? Public opinion isn’t immutable—it’s a battleground where awareness, education, and critical thinking can neutralize even the most sophisticated attempts at manipulation.

Polarizing Society: Exploiting divisions to create loyal bases and weaken opposition support

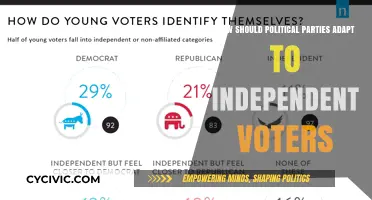

Political parties often exploit societal divisions to solidify their bases and erode support for opponents. By amplifying existing fault lines—racial, economic, cultural, or ideological—they create an "us vs. them" narrative that fosters loyalty among followers while demonizing adversaries. This strategy, though effective, risks destabilizing democracies by prioritizing partisan gain over national unity.

Consider the tactical use of social media algorithms. Parties craft targeted messages that resonate with specific demographics, reinforcing pre-existing beliefs and stoking outrage. For instance, a party might highlight immigration as a threat to jobs in economically struggling regions, while simultaneously portraying opponents as indifferent to local struggles. This dual approach not only galvanizes supporters but also discourages moderate voters from aligning with the opposition, as their positions are framed as extreme or harmful.

A cautionary example lies in countries where polarization has led to political violence. In such cases, parties deliberately escalate rhetoric, labeling opponents as enemies of the state or traitors. This dehumanization weakens democratic institutions by making compromise seem unpatriotic or even dangerous. For instance, in deeply polarized societies, even factual information from neutral sources is dismissed as "fake news" if it contradicts partisan narratives, further entrenching divisions.

To counter this, individuals must actively seek diverse perspectives and question the intent behind polarizing messages. Practical steps include following fact-checking organizations, engaging in cross-partisan dialogues, and limiting exposure to echo chambers. Parties thrive on division, but informed citizens can disrupt the cycle by prioritizing shared values over partisan loyalty. Ultimately, recognizing the manipulation behind polarization is the first step toward rebuilding a cohesive society.

Algorithmic Targeting: Leveraging data analytics to micro-target voters with personalized political ads

Political campaigns have evolved from broad, one-size-fits-all messaging to precision-guided persuasion machines. At the heart of this transformation lies algorithmic targeting, a strategy that leverages data analytics to micro-target voters with personalized political ads. By analyzing vast datasets—ranging from voting histories and social media activity to consumer behavior and geolocation—campaigns craft messages tailored to individual preferences, fears, and aspirations. This approach maximizes engagement by speaking directly to the recipient’s worldview, often bypassing critical thinking and fostering emotional resonance.

Consider the mechanics: a voter in a suburban district might receive ads emphasizing tax cuts and local infrastructure, while a college student in an urban area sees messages about student loan forgiveness and climate action. These ads aren’t random; they’re the result of algorithms identifying patterns in behavior and demographics. For instance, reporting on the 2016 U.S. presidential election indicated that targeted Facebook ads were used to discourage African American voters from participating, highlighting how granular data can be weaponized. The takeaway? Algorithmic targeting isn’t just about persuasion; it’s about manipulation, often exploiting divisions rather than fostering unity.

Implementing this strategy requires a multi-step process. First, campaigns collect data from public records, social media platforms, and third-party vendors. Next, they segment voters into hyper-specific groups based on psychographics, such as "security-focused seniors" or "environmentally conscious millennials." Finally, they deploy tailored ads across digital channels, optimizing in real-time based on engagement metrics. However, this approach isn’t without risks. Over-personalization can lead to echo chambers, where voters are exposed only to information reinforcing their existing beliefs, deepening polarization.
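To make the segmentation and optimization steps concrete, here is a minimal sketch using synthetic records. Everything in it is an assumption made for illustration: the `VoterRecord` fields, the age cut-offs, and the hand-written rules are hypothetical, and real campaign systems are far more elaborate and rely on statistical models rather than simple rules.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class VoterRecord:
    """Synthetic record; every field here is a hypothetical illustration."""
    voter_id: str
    age: int
    top_issue: str  # e.g. "security", "environment", "economy"


def assign_segment(record: VoterRecord) -> str:
    """Map a record to a coarse segment label using hand-written rules.

    The age thresholds are arbitrary assumptions, not real definitions.
    """
    if record.age >= 65 and record.top_issue == "security":
        return "security-focused seniors"
    if 25 <= record.age <= 40 and record.top_issue == "environment":
        return "environmentally conscious millennials"
    return "general audience"


def segment_voters(records):
    """Group voter IDs by segment label."""
    segments = defaultdict(list)
    for record in records:
        segments[assign_segment(record)].append(record.voter_id)
    return dict(segments)


def best_message(engagement):
    """Pick the message variant with the highest click-through rate.

    `engagement` maps variant name -> (clicks, impressions).
    Variants with zero impressions are skipped; returns None if none remain.
    """
    rates = {
        name: clicks / impressions
        for name, (clicks, impressions) in engagement.items()
        if impressions > 0
    }
    return max(rates, key=rates.get) if rates else None
```

Even this toy version shows why the approach narrows what each person sees: once a record lands in a segment, only the variant that performs best for that segment keeps being shown.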

To mitigate these risks, campaigns must balance precision with responsibility. Practical tips include conducting regular audits of data sources to ensure accuracy and transparency, limiting the use of sensitive personal information, and incorporating diverse perspectives in ad creation. Voters, meanwhile, can protect themselves by diversifying their information sources, using ad-blockers, and regularly reviewing privacy settings on social media platforms. While algorithmic targeting is a powerful tool, its ethical deployment hinges on accountability and respect for democratic values.

In comparison to traditional mass advertising, algorithmic targeting offers unparalleled efficiency but at a cost. Mass ads cast a wide net, appealing to general sentiments but often missing the mark. Micro-targeted ads, however, are like surgical strikes, delivering messages with laser precision. Yet, this precision can erode trust if voters feel manipulated or if campaigns prioritize victory over truth. The challenge lies in harnessing the benefits of data-driven campaigning without undermining the integrity of the electoral process. As technology advances, so must our vigilance in ensuring that it serves democracy rather than dividing it.

Fearmongering Tactics: Amplifying threats to rally support and justify extreme policies

Fearmongering is a potent tool in the arsenal of political parties seeking to manipulate public sentiment. By amplifying perceived threats—whether real, exaggerated, or entirely fabricated—politicians can create an atmosphere of urgency that compels voters to rally behind their agenda. This tactic often involves framing issues in stark, binary terms: "us versus them," "safety versus chaos," or "patriotism versus betrayal." For instance, during election seasons, parties might highlight crime statistics, immigration concerns, or economic downturns as existential crises, even if the data is cherry-picked or taken out of context. The goal is to trigger primal emotions like fear and anger, which cloud rational judgment and make extreme policies seem justified.

Consider the playbook of authoritarian regimes, where fearmongering is used to consolidate power. Leaders often portray external enemies or internal dissenters as imminent dangers to national security or cultural identity. In democratic contexts, this strategy is subtler but no less effective. For example, a political party might warn of a "wave of illegal immigrants" threatening jobs and cultural values, even if migration rates are stable or declining. By repeatedly linking these threats to specific policies—such as border walls or restrictive immigration laws—the party positions itself as the sole protector of the public’s well-being. The takeaway here is clear: fearmongering works because it exploits human psychology, bypassing logic in favor of emotional response.

To counter fearmongering, voters must adopt a critical mindset. Start by questioning the source and credibility of the information presented. Are the statistics cited from reputable organizations, or are they cherry-picked to support a narrative? Cross-reference claims with multiple sources, including non-partisan fact-checking websites. Additionally, examine the language used: is it inflammatory, divisive, or overly simplistic? Politicians relying on fearmongering often avoid nuance, preferring broad strokes that leave little room for debate. By recognizing these patterns, individuals can inoculate themselves against manipulation and make informed decisions.

A comparative analysis reveals that fearmongering is not limited to any one political ideology. Both left-leaning and right-leaning parties have employed this tactic, though the threats they amplify differ. For instance, while one party might focus on economic inequality or climate catastrophe, another might emphasize law and order or national sovereignty. The common thread is the use of fear as a motivator. However, the effectiveness of this strategy varies depending on the audience’s pre-existing beliefs and vulnerabilities. Younger voters, for example, may be more skeptical of alarmist claims, while older demographics might be more susceptible due to concerns about stability and change. Understanding these dynamics can help individuals identify when fear is being weaponized to sway opinions.

Finally, fearmongering has real-world consequences that extend beyond election cycles. Policies justified by exaggerated threats often lead to overreach, whether in the form of draconian laws, increased surveillance, or the erosion of civil liberties. For instance, post-9/11 fearmongering about terrorism led to the passage of the Patriot Act, which critics argue infringed on privacy rights. Similarly, fear-driven immigration policies can result in family separations and humanitarian crises. The long-term takeaway is that while fearmongering may yield short-term political gains, its societal costs are profound and lasting. Voters must weigh these implications carefully, recognizing that the price of security should never be the sacrifice of freedom or compassion.

Astroturfing Campaigns: Creating fake grassroots movements to appear widely supported

Astroturfing campaigns are the political equivalent of a mirage—they create the illusion of widespread public support where none exists. Unlike genuine grassroots movements, which emerge organically from the collective will of citizens, astroturfing is a manufactured spectacle. Political parties, corporations, or interest groups orchestrate these campaigns by employing paid actors, bots, or fake accounts to amplify a specific narrative. For instance, during the 2016 U.S. presidential election, researchers identified thousands of bots tweeting pro-Trump hashtags, creating the false impression of a groundswell of public enthusiasm. This tactic exploits the psychological tendency to follow the crowd, making it a powerful tool for shaping public opinion.

An astroturfing campaign typically unfolds in stages. Operators first identify the target audience and the desired narrative. Next, they create or co-opt existing social media accounts to disseminate the message, using bots or paid individuals to generate enough volume that the message appears to be trending or widely supported. Finally, they amplify the campaign through traditional media outlets by framing it as a grassroots movement. The approach carries real risks for its operators: modern analytics tools can detect inauthentic activity, and exposure can backfire, damaging credibility. For example, Facebook’s takedown of fake accounts linked to political campaigns in 2020 highlighted the growing risks of such tactics.

The persuasive power of astroturfing lies in its ability to manipulate perception. By flooding social media with coordinated messages, it creates an echo chamber that drowns out dissenting voices. This is particularly effective in polarizing environments, where audiences are more likely to accept information that aligns with their beliefs. A figure attributed to University of Oxford research holds that 70% of astroturfing campaigns aim to discredit opponents rather than promote a positive agenda. To counter this, individuals should verify the authenticity of online movements by checking the source of posts, analyzing account histories, and cross-referencing with trusted news outlets.
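One signal that analysts look for when checking whether a movement is authentic is many distinct accounts posting near-identical text within a short time. The sketch below illustrates that idea only; the `(account, text, timestamp)` tuple format, the three-account threshold, and the ten-minute window are assumptions chosen for the example, and a match is a prompt for closer inspection, not proof of coordination.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially varied copies match."""
    return " ".join(text.lower().split())


def find_coordinated_groups(posts, min_accounts=3, window=timedelta(minutes=10)):
    """Return {normalized_text: sorted account list} for suspicious bursts.

    `posts` is an iterable of (account, text, timestamp) tuples.
    A text is flagged when at least `min_accounts` distinct accounts post it
    within a span no longer than `window`. The returned list holds the
    accounts in the first qualifying burst only.
    """
    by_text = defaultdict(list)
    for account, text, timestamp in posts:
        by_text[normalize(text)].append((timestamp, account))

    flagged = {}
    for text, entries in by_text.items():
        entries.sort()  # chronological order
        start = 0
        for end in range(len(entries)):
            # Advance the left edge until the span fits inside `window`.
            while entries[end][0] - entries[start][0] > window:
                start += 1
            accounts = {account for _, account in entries[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged[text] = sorted(accounts)
                break
    return flagged


# Hypothetical sample data for illustration.
t0 = datetime(2020, 1, 1, 12, 0)
sample_posts = [
    ("u1", "Vote for X now!", t0),
    ("u2", "vote for  x now!", t0 + timedelta(minutes=2)),
    ("u3", "Vote for X NOW!", t0 + timedelta(minutes=5)),
    ("u4", "I like pancakes", t0),
    ("u5", "Vote for X now!", t0 + timedelta(hours=3)),
]
print(find_coordinated_groups(sample_posts))
```

Exact-match comparison is deliberately simple here. Genuine supporters also repeat slogans, so a result like this is best combined with the account-history checks described above.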

Comparatively, astroturfing differs from lobbying or advertising in its deceptive nature. While lobbying is typically subject to disclosure rules and advertising is openly promotional, astroturfing masquerades as genuine public sentiment. This distinction makes it ethically questionable and, in some contexts, illegal. For instance, the U.S. Federal Trade Commission has cracked down on companies using fake reviews to boost products, a practice akin to political astroturfing. Despite its risks, the tactic persists because it is cost-effective and difficult to trace, making it a tempting strategy for entities seeking to sway public opinion without direct accountability.

In conclusion, astroturfing campaigns are a sophisticated form of social engineering that undermines democratic processes by distorting public discourse. While they may achieve short-term goals, their long-term consequences include eroding trust in institutions and media. As technology advances, so too will the methods for detecting and combating these campaigns. For now, vigilance and critical thinking remain the best defenses against this insidious manipulation.

Frequently asked questions

What is social engineering in politics?

Social engineering in politics refers to the strategic manipulation of societal attitudes, behaviors, and beliefs to achieve political goals. Parties use tactics like framing issues, controlling narratives, and leveraging emotions to influence public opinion and voter behavior.

How do political parties use social media for social engineering?

Political parties use social media to disseminate targeted messages, create echo chambers, and amplify specific narratives. They employ algorithms, bots, and personalized ads to shape public perception, often polarizing audiences to solidify support or discredit opponents.

What role does fear play in political social engineering?

Fear is a powerful tool in political social engineering. Parties often highlight threats (real or perceived) to mobilize voters, create divisions, or justify policies. This tactic exploits human psychology to drive emotional responses and influence decision-making.

Can education help people resist political social engineering?

Yes, education can help individuals recognize manipulative tactics by fostering critical thinking and media literacy. Educated citizens are better equipped to analyze information, identify biases, and resist attempts to engineer their beliefs or behaviors.

Are there ethical concerns about social engineering in politics?

Yes, ethical concerns arise when social engineering undermines democratic principles, such as informed consent, transparency, and fairness. Manipulative tactics can distort public discourse, suppress dissent, and erode trust in institutions, raising questions about their legitimacy.