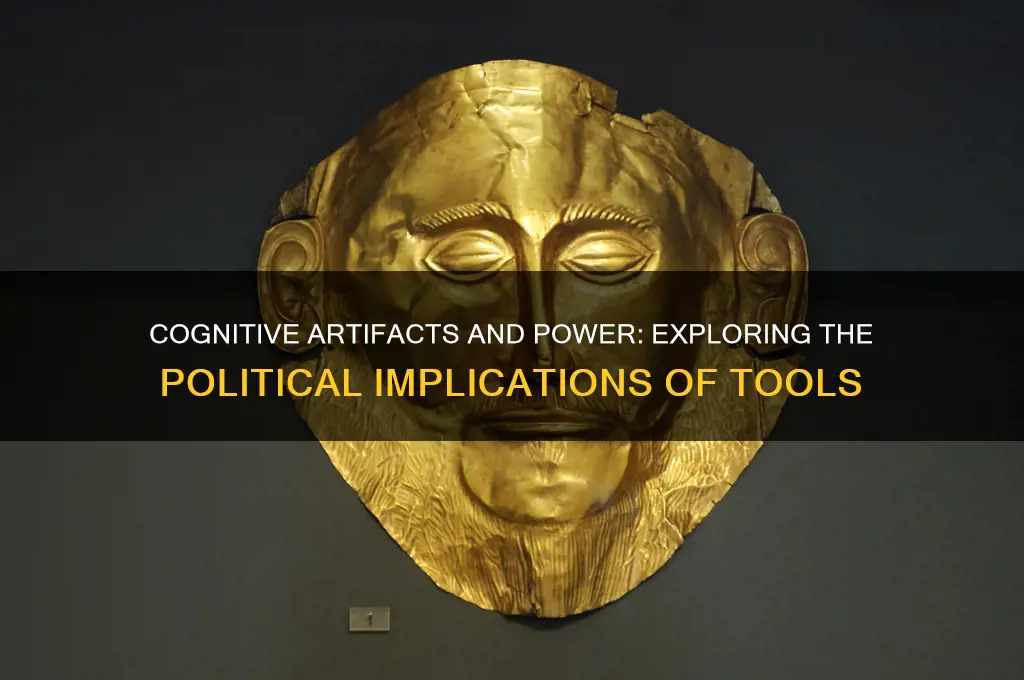

Do cognitive artifacts (the tools, technologies, and systems that extend or shape human cognition) have politics? The question builds on Langdon Winner's argument, made in his 1980 essay "Do Artifacts Have Politics?", that technologies can embody political values. Applied to cognitive artifacts such as algorithms, user interfaces, and language models, it suggests that these are not neutral instruments but carry embedded assumptions, biases, and power structures. For instance, a search engine's ranking algorithm may prioritize certain perspectives over others, and a language model might perpetuate stereotypes, in each case reflecting the values and priorities of its creators and its training data. Examining these artifacts through a political lens shows how they influence thought, behavior, and societal outcomes, and it raises critical questions about equity, accountability, and the democratization of cognitive tools.

| Characteristic | Description |
|---|---|
| Embedded Values | Cognitive artifacts (e.g., algorithms, tools, interfaces) reflect the values, biases, and assumptions of their creators, often invisibly shaping user behavior and outcomes. |
| Power Asymmetries | They can reinforce or challenge existing power structures by privileging certain perspectives, excluding others, or amplifying inequalities. |
| Political Agency | Artifacts are not neutral; they actively participate in shaping social and political realities, often with unintended consequences. |
| Normalization of Practices | They normalize certain ways of thinking, acting, or organizing, which can become politically significant over time. |
| Opacity and Accountability | Many cognitive artifacts (e.g., AI systems) are opaque, making it difficult to scrutinize their political implications or hold creators accountable. |
| Scalability and Reach | Their widespread use can amplify political effects, influencing large populations and societal norms. |
| Historical and Cultural Context | Artifacts are shaped by and shape historical and cultural contexts, embedding political ideologies and practices. |
| Resistance and Subversion | Users can resist or subvert the intended political effects of artifacts, creating new meanings or uses. |
| Design as Political Act | The design of cognitive artifacts is inherently political, as choices about functionality, accessibility, and usability reflect value judgments. |
| Ethical and Social Implications | Their deployment raises ethical questions about fairness, justice, and the distribution of benefits and harms in society. |


What You'll Learn

- Design Bias in Tools: How tool design reflects and reinforces societal biases and power structures

- Access and Exclusion: Who can access cognitive artifacts and who is marginalized by their design

- Cultural Framing: How artifacts shape cultural norms and perspectives through their functionality

- Power Dynamics: The role of artifacts in maintaining or challenging existing power hierarchies

- Ethical Implications: Moral responsibilities in designing artifacts that influence thought and behavior

Design Bias in Tools: How tool design reflects and reinforces societal biases and power structures

Tools are not neutral. Every design choice, from the shape of a screwdriver handle to the algorithms powering search engines, encodes assumptions about the user and the world they inhabit. These assumptions, often invisible to the untrained eye, can perpetuate and amplify existing societal biases and power structures. Consider the QWERTY keyboard layout, which dates to 19th-century typewriters and, by the usual account, was arranged to reduce mechanical jams rather than to maximize typing efficiency. The layout is now ubiquitous and still shapes typing speed and comfort. It may also suit some users, such as those with smaller hands, less well than others, so a constraint from a bygone era is still felt today.

This example illustrates a key principle: design bias in tools is often historical, baked into the very fabric of the object through repeated use and standardization.

Let's take a more contemporary example: facial recognition software. Independent audits have found that many systems misidentify women and people with darker skin at significantly higher rates than lighter-skinned men. This is not a random glitch; it largely reflects the data used to train these algorithms, which often overrepresents lighter-skinned individuals. The consequence? Biased tools used in law enforcement or hiring can lead to discriminatory outcomes, further marginalizing already vulnerable communities.

This highlights another facet of design bias: it's not just about the physical form of a tool, but also the data and algorithms that power it.
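One way to make this kind of bias visible is to compare error rates across groups rather than reporting a single overall accuracy figure. The sketch below is a minimal illustration under stated assumptions: the function name `error_rates_by_group`, the group labels, and the sample records are all hypothetical and invented for this example, not drawn from any real system or study.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the misidentification rate for each group.

    Each record is a (group, predicted_id, true_id) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical evaluation results, invented for illustration only.
sample = [
    ("group_a", "id1", "id1"),
    ("group_a", "id2", "id2"),
    ("group_a", "id3", "id3"),
    ("group_a", "id4", "id9"),  # one error out of four
    ("group_b", "id5", "id5"),
    ("group_b", "id6", "id8"),  # three errors out of four
    ("group_b", "id7", "id1"),
    ("group_b", "id8", "id2"),
]

rates = error_rates_by_group(sample)
# Gap between the best-served and worst-served group.
disparity = max(rates.values()) - min(rates.values())
print(rates)
print(disparity)
```

In this toy data the overall error rate is 50 percent, which hides the fact that one group experiences three times as many errors as the other. Reporting the per-group breakdown is a design choice in its own right.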

Design bias isn't always intentional, but its impact can be profound. A seemingly innocuous design choice, like the default settings on a voice assistant, can reinforce gender stereotypes. Many voice assistants have shipped with a female-sounding voice as the default, which critics argue perpetuates the association of women with servitude and obedience. While this may seem trivial, it contributes to a larger cultural narrative that influences how we perceive and interact with technology and each other.

Combating design bias requires a multi-pronged approach. Firstly, designers must be aware of their own biases and actively seek diverse perspectives throughout the design process. This includes involving users from underrepresented groups in testing and feedback sessions. Secondly, we need to develop more transparent and accountable design practices, making the decision-making process behind tool design more visible and open to scrutiny. Finally, we need to foster a culture of critical thinking about technology, encouraging users to question the assumptions embedded in the tools they use and demand more equitable designs.


Access and Exclusion: Who can access cognitive artifacts and who is marginalized by their design

Cognitive artifacts (tools that extend human cognition, such as smartphones, algorithms, or even language itself) are not neutral. Their design inherently shapes who can use them effectively and who is left behind. Consider the smartphone, a ubiquitous cognitive artifact. While it grants access to vast information and connectivity, its design often excludes people with visual impairments, limited literacy, or financial constraints. For instance, touchscreen interfaces rely heavily on sight and precise touch, which marginalizes users with visual impairments or motor disabilities unless alternatives are built in. Similarly, the cost of high-end devices and data plans creates a digital divide that disproportionately affects low-income communities. This exclusion is rarely deliberate, but it is not random either; it is embedded in the artifact's design and reflects the priorities and biases of its creators.

To illustrate further, take the example of language translation apps, which serve as cognitive artifacts for cross-cultural communication. While they claim universality, these tools often prioritize widely spoken languages like English, Spanish, or Mandarin, leaving lesser-known languages underrepresented or entirely absent. This design choice reinforces linguistic hierarchies, marginalizing speakers of indigenous or minority languages. Even when these languages are included, the accuracy of translation can vary dramatically, further disadvantaging users who rely on these tools for essential communication. The takeaway here is clear: cognitive artifacts are not inherently democratic; their inclusivity is a deliberate choice, not a default setting.

Designing for accessibility requires a proactive approach, one that goes beyond compliance with legal standards. For instance, developers can incorporate features like voice-to-text, haptic feedback, and multilingual support to broaden access. However, this is not merely a technical challenge but a political one. Prioritizing accessibility often means diverting resources from features that cater to the majority, a decision that can be unpopular with stakeholders. For example, adding sign language interpretation to video conferencing platforms benefits a relatively small user base but requires significant investment. Such decisions highlight the political nature of cognitive artifacts: they reflect whose needs are valued and whose are deemed expendable.

A comparative analysis of cognitive artifacts in education reveals further disparities. Learning management systems (LMS) like Canvas or Blackboard are designed to streamline education but often exclude students with unreliable internet access or outdated devices. Similarly, AI-driven tutoring tools, while promising personalized learning, can reinforce biases if trained on non-representative datasets. For instance, an AI tutor might struggle to assist students from non-traditional educational backgrounds, perpetuating existing inequalities. To counter this, designers must adopt a "margins-to-center" approach, where the needs of the most marginalized users inform the core design, ensuring that no one is left behind.

In conclusion, the politics of cognitive artifacts are deeply intertwined with their design and accessibility. By examining who can access these tools and who is excluded, we uncover the biases and priorities embedded in their creation. Practical steps, such as inclusive design principles and equitable resource allocation, can mitigate exclusion, but they require a fundamental shift in how we approach technology. Cognitive artifacts are not just tools; they are reflections of societal values. To create a more just and inclusive world, we must ensure that these artifacts serve everyone, not just the privileged few.


Cultural Framing: How artifacts shape cultural norms and perspectives through their functionality

Cognitive artifacts, from the abacus to the smartphone, are not neutral tools. Their design, their functionality, and even their absence subtly shape the way we think, interact, and understand the world. This is the essence of cultural framing: the process by which artifacts embed and perpetuate specific cultural norms and perspectives. Consider again the QWERTY keyboard layout, by the usual account arranged to reduce typewriter jams. Today, it influences how we learn to type, how quickly we communicate, and how other languages and scripts are fitted onto keyboards built around it. This example illustrates how functionality, seemingly mundane and technical, becomes a powerful force in shaping cultural practices.

To understand this dynamic, examine the role of social media platforms as modern cognitive artifacts. Their ranking algorithms typically optimize for engagement, which tends to amplify sensational or polarizing content. This functionality frames our perception of reality, emphasizing conflict over consensus and immediacy over depth. For instance, by one estimate users aged 18–24 spend an average of 3 hours a day on social media, during which they are exposed to curated narratives that reinforce specific cultural values, such as individualism or consumerism. The takeaway is clear: the functionality of these platforms is not just a tool for connection but a lens through which we interpret and engage with society.
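The effect of a ranking objective can be sketched in a few lines. The following is a minimal illustration, not any platform's actual algorithm: the `Post` fields, the scores, and the `reliability_weight` parameter are all hypothetical, invented here to show how a single design choice reorders what users see.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    engagement: float   # hypothetical predicted engagement, 0..1
    reliability: float  # hypothetical source-reliability score, 0..1


def rank(posts, reliability_weight=0.0):
    """Order post titles by a weighted blend of engagement and reliability.

    A weight of 0.0 ranks purely by engagement; 1.0 ranks purely by reliability.
    """
    def score(post):
        return ((1 - reliability_weight) * post.engagement
                + reliability_weight * post.reliability)

    return [p.title for p in sorted(posts, key=score, reverse=True)]


# Invented example feed.
posts = [
    Post("Outrage headline", engagement=0.9, reliability=0.2),
    Post("Careful explainer", engagement=0.4, reliability=0.9),
    Post("Local news update", engagement=0.6, reliability=0.7),
]

engagement_only = rank(posts)
blended = rank(posts, reliability_weight=0.6)
print(engagement_only)
print(blended)
```

With engagement as the only objective the sensational item leads the feed; once reliability carries weight it drops to last place. The content and the users are unchanged, and only the value judgment encoded in the weight differs.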

A comparative analysis of navigation tools further highlights this point. Traditional maps, with their fixed scales and orientations, encourage a spatial understanding centered on north as "up." In contrast, GPS systems, with their dynamic, user-centered perspectives, shift focus to the individual’s immediate path. This change in functionality alters not just how we navigate but also how we conceptualize space and our place within it. For older generations accustomed to maps, this shift may feel disorienting, while younger users, having grown up with GPS, may find traditional maps cumbersome. The lesson? Artifacts do not merely reflect culture; they actively reshape it through their design and use.

To mitigate the unintended consequences of cultural framing, designers and users must adopt a critical approach. For instance, educators can incorporate media literacy programs for adolescents (ages 13–18) to dissect how platforms like Instagram or TikTok frame beauty standards or political discourse. Similarly, product designers can prioritize inclusive functionality, such as multilingual interfaces or accessibility features, to challenge dominant cultural narratives. A practical tip: when evaluating a new tool, ask not just "What does it do?" but "What does it assume about the world, and how might it change my perspective?" By doing so, we can harness the power of cognitive artifacts to foster diversity rather than conformity.

Ultimately, cultural framing is a double-edged sword. While artifacts can entrench harmful norms, they also offer opportunities for transformation. The functionality of a tool is never apolitical; it carries the values, biases, and intentions of its creators. Recognizing this allows us to use artifacts more consciously, ensuring they serve as bridges to understanding rather than barriers to it. Whether designing a new app or teaching a child to read a map, the choices we make today will shape the cultural landscapes of tomorrow.


Power Dynamics: The role of artifacts in maintaining or challenging existing power hierarchies

Cognitive artifacts—tools that extend our mental capabilities, from calendars to algorithms—are not neutral. They embed the values, biases, and intentions of their creators, often reinforcing or subverting power structures in subtle yet profound ways. Consider the design of standardized tests, which historically favor certain cultural and linguistic norms, systematically disadvantaging marginalized groups. These artifacts don’t merely reflect societal hierarchies; they actively participate in their maintenance, often under the guise of objectivity.

To challenge these dynamics, start by interrogating the origins and assumptions of the artifacts you use. For instance, a workplace productivity app might prioritize metrics like "efficiency" that devalue rest or creativity, reinforcing a capitalist work ethic. To counteract this, adopt a practice of "artifact auditing": examine how tools allocate resources, distribute attention, or measure success. Ask, *Whose interests does this serve?* and *What alternatives could center equity?* This critical lens transforms passive users into active participants in reshaping power.

A persuasive case study is the design of urban navigation apps. Routing features that steer users away from areas labeled "unsafe" can end up directing traffic through wealthier neighborhoods, and the label itself is frequently rooted in racial bias. Such algorithms don't just describe the world; they prescribe it, reinforcing spatial segregation. To disrupt this, advocate for participatory design processes that include voices from marginalized communities. For example, community mapping initiatives invite residents to map their own neighborhoods, reclaiming narratives and challenging dominant representations.
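The navigation example can be made concrete with a toy cost function. This is a sketch under stated assumptions, not how any real routing product works: the `choose_route` function, the `flagged_areas` field, and the penalty value are hypothetical and exist only to show how a label turns into a prescription.

```python
def choose_route(routes, avoid_penalty_minutes=0):
    """Return the name of the lowest-cost route.

    Cost is travel time plus a penalty for each flagged area the route crosses.
    """
    def cost(route):
        return route["minutes"] + avoid_penalty_minutes * route["flagged_areas"]

    return min(routes, key=cost)["name"]


# Invented routes: the direct one crosses an area someone labeled "unsafe".
routes = [
    {"name": "direct", "minutes": 12, "flagged_areas": 1},
    {"name": "detour", "minutes": 18, "flagged_areas": 0},
]

without_label = choose_route(routes)
with_label = choose_route(routes, avoid_penalty_minutes=10)
print(without_label)
print(with_label)
```

Nothing about the streets changes between the two calls. The only difference is that someone decided which areas to flag and how heavily to penalize them, and that decision determines where people actually travel.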

Finally, consider the comparative impact of open versus proprietary artifacts. Open projects, such as the openly licensed Wikipedia or the open-source Linux kernel, democratize access and allow users to inspect and modify them, fostering collective agency. Proprietary systems, in contrast, often lock users into predetermined frameworks, limiting their ability to challenge embedded biases. By choosing or creating open tools, individuals and communities can redistribute power, turning artifacts from instruments of control into platforms for liberation.

In practice, this means prioritizing transparency, inclusivity, and adaptability in artifact design. For educators, this could involve using modular learning platforms that students can customize. For policymakers, it might mean mandating bias audits for public-facing algorithms. The takeaway is clear: cognitive artifacts are not just tools—they are terrains of struggle. By engaging with them critically, we can shift the balance of power, one design decision at a time.


Ethical Implications: Moral responsibilities in designing artifacts that influence thought and behavior

Cognitive artifacts—tools, technologies, and systems that shape how we think and act—are not neutral. From search algorithms to social media interfaces, these designs embed values and biases that subtly influence users. Designers, therefore, bear a moral responsibility to anticipate and mitigate the ethical consequences of their creations. For instance, a recommendation algorithm that prioritizes engagement over accuracy can amplify misinformation, while a fitness app that sets unrealistic goals may harm mental health. Recognizing this power is the first step toward ethical design.

Consider the case of educational software tailored for children aged 8–12. If the program reinforces gender stereotypes by assigning math problems to boys and language tasks to girls, it perpetuates harmful biases during a critical developmental stage. Designers must actively challenge such defaults, ensuring artifacts promote inclusivity and equity. Practical steps include conducting bias audits, involving diverse user groups in testing, and setting clear ethical guidelines for data use. Transparency in design choices—such as disclosing how algorithms make decisions—empowers users to make informed choices.

Persuasive design, often used in health apps or productivity tools, raises another ethical dilemma. While nudging users toward positive behaviors (e.g., reminders to drink water) can be beneficial, manipulation crosses a line. For example, a meditation app that plays on users' anxiety to drive subscriptions exploits vulnerability rather than fostering well-being. Designers must balance efficacy with respect for user autonomy, ensuring that persuasive elements are optional and clearly labeled. A rule of thumb: if the user feels coerced rather than supported, the design has overstepped.

Comparing cognitive artifacts to physical infrastructure highlights the stakes. Just as a poorly designed bridge endangers lives, a flawed cognitive artifact can harm communities. For instance, facial recognition systems with racial biases disproportionately affect marginalized groups, while autocomplete features that suggest harmful content can escalate mental health crises. Designers must adopt a "do no harm" principle, prioritizing long-term societal impact over short-term gains. Collaboration with ethicists, psychologists, and affected communities can provide critical insights to avoid unintended consequences.

Ultimately, the moral responsibility in designing cognitive artifacts lies in treating them as political acts. Every choice—from data sources to user interfaces—shapes behavior and thought, often invisibly. Designers must embrace this accountability by adopting frameworks like value-sensitive design, which integrates ethical considerations from the outset. By doing so, they can create artifacts that not only function well but also uphold dignity, justice, and human flourishing. The question is not whether cognitive artifacts have politics, but whether their politics serve the greater good.


Frequently asked questions

To say that cognitive artifacts have politics means that tools, technologies, and systems designed to aid human cognition (like software, algorithms, or even language) embed values, biases, and power structures that can influence behavior, decision-making, and societal outcomes.

Cognitive artifacts can reinforce existing power dynamics, exclude certain perspectives, or prioritize specific goals. For example, algorithms that prioritize efficiency might marginalize certain communities, reflecting the political choices of their designers.

Cognitive artifacts are rarely neutral because they are designed with specific purposes, assumptions, and contexts in mind. Even seemingly neutral tools reflect the values and priorities of their creators, making them inherently political.

Examples of politically significant cognitive artifacts include search engine algorithms that shape access to information, social media platforms that influence public discourse, and standardized tests that reflect specific cultural or educational norms. Each of these tools carries political consequences.
