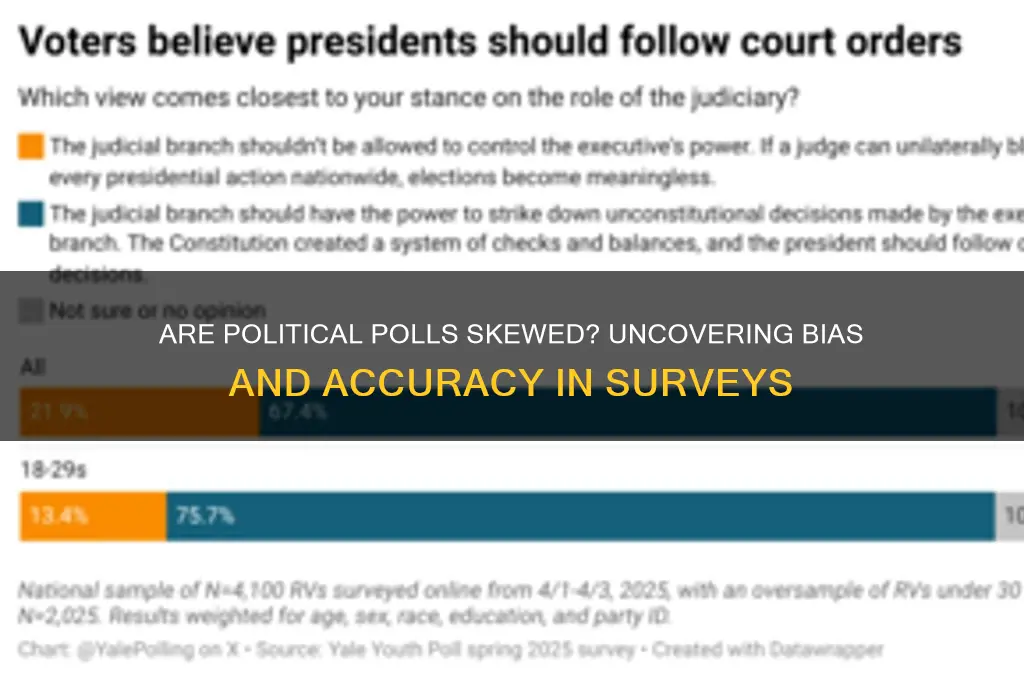

The accuracy and impartiality of political polls have long been a subject of debate, with many questioning whether these surveys are skewed to favor certain candidates, parties, or ideologies. Critics argue that factors such as sample selection bias, question wording, and response rates can significantly influence poll outcomes, potentially leading to misleading results. The rise of partisan polling organizations and the increasing polarization of media outlets have also raised concerns about intentional manipulation to shape public opinion. Proponents, however, contend that reputable polling firms employ rigorous methodologies to ensure fairness and reliability, though they acknowledge that no poll is immune to sampling error or unforeseen events. As political landscapes grow more complex, the question of whether polls are skewed remains a critical issue for understanding public sentiment and electoral dynamics.

What You'll Learn

- Media Bias Influence: How media outlets selectively report polls to sway public opinion or narratives

- Sampling Methodology: Issues with representative samples, such as excluding key demographics or groups

- Question Wording: How phrasing poll questions can manipulate responses and skew results intentionally

- Response Rates: Low participation rates and their impact on poll accuracy and reliability

- Timing of Polls: How poll timing, especially near elections, can distort public sentiment snapshots

Media Bias Influence: How media outlets selectively report polls to sway public opinion or narratives

Media outlets wield significant power in shaping public perception, and their selective reporting of political polls is a prime example of this influence. Consider the 2016 U.S. presidential election, where some outlets emphasized polls showing Hillary Clinton with a comfortable lead, while others highlighted surveys suggesting a tighter race. This disparity wasn’t accidental—it reflected editorial choices driven by ideological leanings or the desire to drive engagement. By cherry-picking data, media organizations can amplify narratives that align with their agendas, subtly steering public opinion in a desired direction.

To understand this mechanism, examine how polls are framed. A poll showing a candidate leading by 5 percentage points might be reported as a "landslide" by one outlet and a "statistical tie" by another. Such framing isn’t just about numbers; it’s about storytelling. For instance, during the Brexit referendum, pro-Leave outlets often highlighted polls showing momentum for their side, while Remain-leaning media focused on polls predicting a narrow victory for staying in the EU. This selective emphasis creates a feedback loop: audiences hear what aligns with their biases, reinforcing their beliefs and further polarizing the discourse.
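Whether a 5-point lead deserves the "statistical tie" label depends on sample size, and the margin of error on the gap between two candidates is larger than the headline margin of error on each candidate's share. The sketch below assumes a simple random sample and uses hypothetical numbers (48% vs. 43% among 600 respondents); `moe_of_lead` is an illustrative helper, not a library function.

```python
import math


def moe_of_lead(p1: float, p2: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for the lead p1 - p2, where both shares
    come from the same simple random sample of n respondents.

    Under a multinomial model, Var(p1_hat - p2_hat) = (p1 + p2 - (p1 - p2)**2) / n.
    """
    variance = (p1 + p2 - (p1 - p2) ** 2) / n
    return z * math.sqrt(variance)


# Hypothetical poll: candidate A at 48%, candidate B at 43%, 600 respondents.
share_a, share_b, respondents = 0.48, 0.43, 600
lead = share_a - share_b
lead_moe = moe_of_lead(share_a, share_b, respondents)

print(f"Lead: {lead:.1%}, margin of error on the lead: ±{lead_moe:.1%}")
if lead > lead_moe:
    print("Lead exceeds its margin of error")
else:
    print("Lead is within its margin of error")
```

With these hypothetical figures the 5-point lead sits inside a margin of roughly ±7.6 points, so "statistical tie" is the more defensible framing; with 3,000 respondents the same lead would clear its margin.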

Practical steps can help consumers navigate this bias. First, cross-reference multiple sources to identify patterns or outliers. Second, scrutinize the poll’s methodology: sample size, demographic representation, and question wording can all skew results. Third, pay attention to how the media presents the data. Are they focusing on a single poll or aggregating multiple surveys? Aggregation smooths out the random noise and outliers of individual polls, though it cannot correct an error that most polls share. Tools like FiveThirtyEight’s poll tracker can be invaluable for this purpose.
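As a rough illustration of why aggregation helps, the sketch below pools three hypothetical polls with a sample-size-weighted average. The pollster names and numbers are invented; real aggregators typically also adjust for recency, pollster track record, and house effects, which this sketch omits.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Poll:
    pollster: str
    candidate_share: float  # share for the candidate of interest, between 0 and 1
    sample_size: int


def aggregate(polls: List[Poll]) -> float:
    """Sample-size-weighted average of several polls (no recency or quality adjustments)."""
    total_n = sum(p.sample_size for p in polls)
    return sum(p.candidate_share * p.sample_size for p in polls) / total_n


polls = [
    Poll("Hypothetical Pollster A", 0.52, 800),
    Poll("Hypothetical Pollster B", 0.47, 1200),
    Poll("Hypothetical Pollster C", 0.49, 1000),
]

print(f"Single headline poll: {polls[0].candidate_share:.1%}")
print(f"Aggregate of all three: {aggregate(polls):.1%}")
```

An outlet quoting only the first poll would report 52%, while the pooled estimate is 49%; if all three polls shared the same sampling flaw, however, the average would inherit it.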

A cautionary note: media bias isn’t always overt. Subtle choices, like which polls to cover or how to visualize data, can sway interpretation. For example, a bar graph exaggerating small differences or a headline focusing on a single demographic group can distort the overall picture. Being aware of these tactics empowers readers to question the narrative rather than accept it at face value.

In conclusion, media outlets’ selective reporting of polls is a powerful tool for shaping public opinion. By understanding their strategies and adopting critical consumption habits, individuals can mitigate the influence of bias. The key lies in recognizing that polls are not neutral facts but pieces of a larger puzzle—one that media organizations often assemble to fit their preferred narrative.

Sampling Methodology: Issues with representative samples, such as excluding key demographics or groups

Political polls often claim to reflect public opinion, but their accuracy hinges on one critical factor: sampling methodology. A representative sample must mirror the population it aims to describe, yet achieving this is fraught with challenges. Excluding key demographics, whether intentionally or due to logistical constraints, distorts results and renders polls unreliable. For instance, landline-based phone surveys may underrepresent younger voters, who rely on mobile phones and are less likely to answer unknown calls, while online polls can exclude older adults with limited internet access. This demographic skew undermines the poll’s ability to capture the true sentiment of the entire population.

Consider the 2016 U.S. presidential election, where many polls failed to predict Donald Trump’s victory. Post-election analyses revealed that some surveys underrepresented rural and working-class voters, groups that heavily favored Trump; many state polls, for example, did not weight their samples by education, so voters without college degrees were underrepresented. This oversight wasn’t merely a statistical error; it was a methodological one. Some pollsters rely on convenience sampling, targeting easily accessible groups like urban residents or frequent survey respondents. Such shortcuts introduce bias, as these groups rarely reflect the diversity of the electorate. To avoid this, pollsters should employ stratified sampling, ensuring each demographic subgroup is proportionally represented. For example, if 20% of the population is over 65, 20% of the sample should be as well.
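Proportional stratified sampling can be sketched in a few lines. This is a minimal illustration, assuming a sampling frame that is already split into age strata; apart from the 20% over-65 share mentioned above, the population shares, group labels, and frame are hypothetical.

```python
import random
from typing import Dict, List


def proportional_allocation(shares: Dict[str, float], sample_size: int) -> Dict[str, int]:
    """Split sample_size across strata in proportion to population shares
    (assumed to sum to 1), using largest-remainder rounding so counts add up."""
    raw = {group: share * sample_size for group, share in shares.items()}
    counts = {group: int(value) for group, value in raw.items()}
    leftover = sample_size - sum(counts.values())
    # Hand any leftover slots to the strata with the largest fractional remainders.
    by_remainder = sorted(raw, key=lambda g: raw[g] - counts[g], reverse=True)
    for group in by_remainder[:leftover]:
        counts[group] += 1
    return counts


def stratified_sample(frame: Dict[str, List[str]], counts: Dict[str, int], seed: int = 0) -> List[str]:
    """Draw a simple random sample of the allotted size within each stratum."""
    rng = random.Random(seed)
    sample: List[str] = []
    for group, n in counts.items():
        sample.extend(rng.sample(frame[group], n))
    return sample


# Hypothetical population shares by age group (only the 20% for 65+ comes from the text).
population_shares = {"18-29": 0.20, "30-49": 0.33, "50-64": 0.27, "65+": 0.20}
# Hypothetical sampling frame: 5,000 listed people per stratum.
frame = {g: [f"{g}#{i}" for i in range(5000)] for g in population_shares}

counts = proportional_allocation(population_shares, 1000)
sample = stratified_sample(frame, counts)
print(counts)
print(len(sample))
```

Because each stratum is sampled separately, the over-65 group makes up exactly 20% of the sample by construction rather than by luck.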

Another issue arises when certain groups are systematically excluded due to language barriers, geographic inaccessibility, or cultural reluctance to participate. Hispanic or immigrant communities, for instance, are often underrepresented in polls due to language differences or mistrust of surveyors. This exclusion is particularly problematic in regions where these groups constitute a significant portion of the electorate. Pollsters can mitigate this by offering surveys in multiple languages and partnering with community organizations to build trust. Additionally, using mixed sampling methods—combining phone, online, and in-person surveys—can help reach a broader audience.

Even when pollsters attempt to include diverse groups, response rates can skew results. Non-response bias occurs when those who choose to participate differ significantly from those who do not. For example, individuals with stronger political opinions are more likely to respond to polls, while less engaged voters may opt out. This can amplify strongly held views and understate the views of less engaged, often more moderate, voters. To counteract this, pollsters weight responses so that the sample matches known population characteristics drawn from sources such as census data. However, this requires meticulous attention to detail and robust data sources, which not all polling organizations prioritize.
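Weighting to census benchmarks is often done with post-stratification: each respondent in a group receives a weight equal to the group's population share divided by its sample share. The sketch below assumes a single weighting variable (age) and uses invented sample data in which older respondents are overrepresented; production weighting usually balances several variables at once (for example by raking), which this sketch does not attempt.

```python
from collections import Counter
from typing import Dict, List, Tuple


def poststratification_weights(groups: List[str], population_shares: Dict[str, float]) -> Dict[str, float]:
    """Weight per group = population share / sample share."""
    n = len(groups)
    sample_counts = Counter(groups)
    return {g: population_shares[g] / (sample_counts[g] / n) for g in sample_counts}


def support_share(respondents: List[Tuple[str, bool]], weights: Dict[str, float]) -> float:
    """Weighted share of respondents answering 'yes'."""
    total = sum(weights[g] for g, _ in respondents)
    yes = sum(weights[g] for g, answer in respondents if answer)
    return yes / total


# Hypothetical sample of 100: adults 18-44 are 30% of the sample but 50% of the population.
respondents = (
    [("18-44", True)] * 18 + [("18-44", False)] * 12
    + [("45+", True)] * 28 + [("45+", False)] * 42
)
census_shares = {"18-44": 0.5, "45+": 0.5}  # hypothetical benchmark

weights = poststratification_weights([g for g, _ in respondents], census_shares)
unweighted = sum(answer for _, answer in respondents) / len(respondents)
weighted = support_share(respondents, weights)
print(f"unweighted: {unweighted:.1%}, weighted: {weighted:.1%}")
```

Weighting corrects the imbalance only on the variables used to build the weights; if the people who responded within a group differ from those who did not, that bias remains.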

In conclusion, the integrity of political polls rests on their ability to include all relevant demographics. Excluding key groups—whether due to convenience, inaccessibility, or non-response—introduces bias that compromises accuracy. Pollsters must adopt rigorous sampling techniques, such as stratification and mixed methods, to ensure representation. By addressing these methodological issues, polls can better reflect public opinion and serve as reliable tools for understanding electoral dynamics. Without such measures, they risk perpetuating skewed narratives that misinform both the public and policymakers.

Question Wording: How phrasing poll questions can manipulate responses and skew results intentionally

The way a question is phrased in a political poll can significantly influence the responses received, often leading to skewed results. Consider the following example: a poll asking, "Do you support the government's efforts to increase taxes on the wealthy to fund public education?" is likely to elicit more positive responses than one asking, "Do you approve of the government taking more money from successful individuals to pay for schools?" The former emphasizes the benefit (funding public education), while the latter frames the same policy as a cost imposed on "successful individuals." This subtle shift in wording can manipulate responses, demonstrating how question phrasing is a powerful tool in shaping poll outcomes.

To craft unbiased poll questions, follow these steps: first, use neutral language that avoids emotional triggers or loaded terms. For instance, instead of asking, "Should we stop the reckless spending on unnecessary programs?" use "What is your opinion on the current allocation of government funds to various programs?" Second, ensure the question is clear and specific, avoiding ambiguity that could lead to misinterpretation. A vague question like "Do you think the economy is doing well?" may yield different responses than "Do you think the current unemployment rate is acceptable?" Lastly, test the question on a small, diverse group to identify potential biases or confusion before administering the poll to a larger audience.

A comparative analysis of two polls on the same topic can reveal the impact of question wording, but only if the comparison is read carefully. In a study on climate change, one poll asked, "Do you believe human activity is the primary cause of global warming?" while another asked, "Do you think climate change is a natural phenomenon not influenced by human actions?" The first question received 65% agreement, whereas the second received only 35%. On their own, these figures do not demonstrate a wording effect: the two questions are close to mirror images, so 65% and 35% are roughly what a single, consistent set of opinions would produce. A framing effect shows up when two wordings of the same question yield different answers from comparable respondents, which is why careful wording tests randomly assign each version to part of the sample. Leading questions can steer respondents toward a particular viewpoint, underscoring the need for careful question design to ensure accurate results.
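A common way to measure a wording effect is a split-ballot experiment: each respondent is randomly shown one of two versions of a question, and the two "yes" rates are compared. The sketch below applies a standard pooled two-proportion z-test to hypothetical counts for the two tax-and-education wordings discussed earlier; the counts are invented for illustration.

```python
import math
from typing import Tuple


def two_proportion_z_test(yes_a: int, n_a: int, yes_b: int, n_b: int) -> Tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z statistic, two-sided p-value)."""
    p_a, p_b = yes_a / n_a, yes_b / n_b
    pooled = (yes_a + yes_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical split ballot with 500 respondents per wording.
# Wording A: "...increase taxes on the wealthy to fund public education?"
# Wording B: "...taking more money from successful individuals to pay for schools?"
z, p = two_proportion_z_test(yes_a=312, n_a=500, yes_b=248, n_b=500)
print(f"A: {312 / 500:.1%}  B: {248 / 500:.1%}  z = {z:.2f}  p = {p:.2g}")
```

Random assignment is what makes the comparison fair: a gap between the versions that is larger than chance would produce can be attributed to the wording rather than to who was asked.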

Persuasive techniques in question wording often exploit cognitive biases, such as the tendency to agree with statements that align with one’s identity or values. For example, a poll targeting voters in a conservative district might ask, "Do you support protecting traditional family values by opposing same-sex marriage?" This phrasing appeals to the respondents' self-identification as conservatives, increasing the likelihood of agreement. To counteract such manipulation, poll creators should avoid framing questions in a way that presupposes a particular stance or appeals to specific demographics. Instead, they should focus on presenting the issue objectively, allowing respondents to form their own opinions without undue influence.

In practical terms, understanding the role of question wording in skewing poll results can help both creators and consumers of polls. For creators, it emphasizes the importance of rigorous question design and testing to ensure fairness and accuracy. For consumers, it serves as a reminder to critically evaluate poll questions before accepting the results as definitive. By being aware of how phrasing can manipulate responses, individuals can better interpret political polls and make more informed decisions based on the data presented. This awareness is particularly crucial in an era where polls play a significant role in shaping public discourse and policy decisions.

Response Rates: Low participation rates and their impact on poll accuracy and reliability

Low response rates in political polls have become a critical issue, undermining the accuracy and reliability of survey results. Consider this: by commonly cited estimates, response rates for telephone surveys averaged around 80% in the 1970s; today, they often fall below 10%. This dramatic decline isn’t just a logistical headache; it’s a statistical nightmare. When only a small fraction of contacted individuals participate, the sample risks becoming unrepresentative of the broader population. Non-response bias creeps in, as those who do respond often differ systematically from those who don’t. For instance, older, more educated, and politically engaged individuals are more likely to answer polls, skewing results toward their perspectives. This imbalance can lead to predictions that miss the mark, as seen in the 2016 U.S. presidential election, where many polls underestimated support for Donald Trump.
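A short calculation shows why differential response, rather than a low rate by itself, is what creates bias. The groups, population shares, response rates, and support levels below are all hypothetical.

```python
from typing import Dict


def respondent_mix(pop_shares: Dict[str, float], response_rates: Dict[str, float]) -> Dict[str, float]:
    """Share of completed interviews coming from each group."""
    completes = {g: pop_shares[g] * response_rates[g] for g in pop_shares}
    total = sum(completes.values())
    return {g: c / total for g, c in completes.items()}


def raw_estimate(pop_shares: Dict[str, float], response_rates: Dict[str, float],
                 support: Dict[str, float]) -> float:
    """Unweighted poll estimate: support averaged over whoever responded."""
    mix = respondent_mix(pop_shares, response_rates)
    return sum(mix[g] * support[g] for g in mix)


pop_shares = {"highly engaged": 0.4, "less engaged": 0.6}
support = {"highly engaged": 0.55, "less engaged": 0.45}  # support for a hypothetical candidate
true_support = sum(pop_shares[g] * support[g] for g in pop_shares)

differential = {"highly engaged": 0.12, "less engaged": 0.04}
uniform = {"highly engaged": 0.07, "less engaged": 0.07}

for label, rates in [("differential", differential), ("uniform", uniform)]:
    overall_rate = sum(pop_shares[g] * rates[g] for g in pop_shares)
    estimate = raw_estimate(pop_shares, rates, support)
    print(f"{label}: response rate {overall_rate:.1%}, estimate {estimate:.1%}, truth {true_support:.1%}")
```

Both scenarios have single-digit response rates, but only the one where engaged people respond three times as often pulls the raw estimate (about 51.7%) away from the true 49%.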

To mitigate the impact of low response rates, pollsters employ weighting techniques, adjusting the data to match known demographic distributions. However, this method is only as good as the assumptions it relies on. If the underlying population has shifted in ways not captured by census data or other benchmarks, weighting can amplify errors rather than correct them. For example, if younger voters are underrepresented in the sample and their turnout patterns have changed, even weighted results may fail to reflect their true influence. This highlights a paradox: as response rates drop, the need for sophisticated adjustments grows, but the reliability of those adjustments diminishes.
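The paradox can be made concrete with Kish's effective sample size, a standard approximation (not one the text above names) for how much precision unequal weights cost: n_eff = (Σw)² / Σw². The weights below are hypothetical: a group that is 10% of the sample but 30% of the population is weighted up by 3, and everyone else is weighted down to match.

```python
import math
from typing import List


def kish_effective_n(weights: List[float]) -> float:
    """Kish's approximation of effective sample size: (sum of w)^2 / sum of w^2."""
    return sum(weights) ** 2 / sum(w * w for w in weights)


def margin_of_error(n: float, p: float = 0.5, z: float = 1.96) -> float:
    """95% margin of error for a proportion under simple random sampling."""
    return z * math.sqrt(p * (1 - p) / n)


# 1,000 hypothetical respondents: 100 in an underrepresented group (10% of sample,
# 30% of population) and 900 others (90% of sample, 70% of population).
weights = [0.30 / 0.10] * 100 + [0.70 / 0.90] * 900

n_eff = kish_effective_n(weights)
print(f"nominal n = {len(weights)}, effective n = {n_eff:.0f}")
print(f"margin of error: ±{margin_of_error(len(weights)):.1%} nominal, "
      f"±{margin_of_error(n_eff):.1%} after weighting")
```

Here 1,000 interviews carry roughly the precision of 692 unweighted ones. Kish's formula is only a rough guide, and it cannot reveal bias from groups whose behavior has shifted in ways the weights do not capture.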

Practical steps can be taken to improve response rates and, by extension, poll accuracy. First, diversify contact methods. While phone surveys remain common, incorporating text messages, emails, and even social media can reach broader demographics. Second, incentivize participation. Offering small rewards, such as gift cards or entries into prize drawings, has been shown to increase response rates by up to 20% in some studies. Third, keep surveys concise. Long, tedious questionnaires deter participation; keeping a survey to 5–10 minutes can significantly boost completion rates. Finally, transparency builds trust. Clearly explaining how the data will be used and ensuring anonymity can encourage more people to engage.

Despite these efforts, low response rates will likely persist, necessitating a shift in how we interpret poll results. Users of political polls, from journalists to policymakers, must approach findings with caution, scrutinizing methodologies and margins of error. For instance, a margin of error of ±4 percentage points is conventionally reported at a 95% confidence level, but that calculation assumes a representative random sample, an assumption that low response rates jeopardize. Cross-referencing multiple polls and tracking trends over time can provide a more robust picture, smoothing out anomalies caused by non-response bias. Ultimately, while polls remain a valuable tool, their limitations must be acknowledged to avoid misplaced confidence in their predictions.
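The textbook margin-of-error formula makes the dependence on a representative sample explicit: MOE = z·√(p(1 − p)/n), with z ≈ 1.96 for 95% confidence and p = 0.5 as the worst case. A minimal sketch, assuming simple random sampling; both helper names are illustrative.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error for a proportion; z = 1.96 corresponds to 95% confidence.
    Valid only for a simple random sample; it says nothing about non-response bias."""
    return z * math.sqrt(p * (1 - p) / n)


def required_sample_size(moe: float, p: float = 0.5, z: float = 1.96) -> int:
    """Smallest n whose margin of error is at most moe (same assumptions)."""
    return math.ceil((z / moe) ** 2 * p * (1 - p))


n = required_sample_size(0.04)
print(f"respondents needed for ±4 points: {n}")
print(f"margin of error with {n} respondents: ±{margin_of_error(n):.2%}")
```

About 600 respondents are enough for ±4 points on paper, but because the formula assumes everyone in the population had a known chance of responding, that figure understates the real uncertainty when response rates are low.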

Timing of Polls: How poll timing, especially near elections, can distort public sentiment snapshots

The timing of political polls, particularly those conducted in the weeks leading up to an election, can significantly distort the snapshot of public sentiment they aim to capture. Consider the 2016 U.S. presidential election, where polls consistently showed Hillary Clinton ahead, only for Donald Trump to win the Electoral College. One critical factor was the timing of late-breaking news, such as the FBI’s reopening of the investigation into Clinton’s emails 11 days before the election. Polls taken before this event could not capture the shift in voter sentiment it triggered, illustrating how even small differences in timing can skew results.

To understand this distortion, imagine polling as a photograph of public opinion. Just as a photo taken at dusk differs from one taken at noon, polls taken at different times capture different realities. For instance, a poll conducted 30 days before an election might reflect voter intentions before debates, scandals, or advertising blitzes have fully influenced the electorate. Even a poll taken 48 hours before Election Day can miss undecided voters who break late toward one candidate, and late polls are no guarantee of accuracy: in the 2020 U.S. election, Biden’s lead in the final polls was wider than his actual margin of victory. This volatility underscores the importance of treating poll timing as a variable, not a constant.

Practical tips for consumers of political polls include examining the field dates—the period when the poll was conducted—to assess how recent events might have been factored in. For example, a poll taken immediately after a high-profile debate may overstate the impact of a candidate’s performance, while one taken weeks later might understate it. Additionally, look for polls that track trends over time rather than relying on a single snapshot. A series of polls conducted weekly or biweekly can reveal whether shifts in sentiment are sudden or gradual, providing a more nuanced understanding of voter behavior.
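Tracking a trend rather than a snapshot can be as simple as averaging the polls whose field periods ended within a recent window. The sketch below uses hypothetical weekly polls (dates and shares invented, including a post-debate bump) and a 14-day window chosen arbitrarily.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple


def rolling_average(polls: List[Tuple[date, float]], as_of: date, window_days: int = 14) -> Optional[float]:
    """Average share across polls whose field period ended in (as_of - window_days, as_of]."""
    start = as_of - timedelta(days=window_days)
    recent = [share for end_date, share in polls if start < end_date <= as_of]
    return sum(recent) / len(recent) if recent else None


# Hypothetical polls: (last field date, candidate share). The 53% reading follows a debate.
polls = [
    (date(2024, 10, 1), 0.47),
    (date(2024, 10, 8), 0.48),
    (date(2024, 10, 15), 0.47),
    (date(2024, 10, 18), 0.53),
    (date(2024, 10, 22), 0.49),
    (date(2024, 10, 29), 0.48),
]

for as_of in (date(2024, 10, 15), date(2024, 10, 22), date(2024, 10, 29)):
    print(as_of, f"{rolling_average(polls, as_of):.1%}")
```

The single post-debate poll reads 53%, while the two-week average moves only from about 47.5% to 50%, which helps separate a sudden spike from a gradual shift.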

A comparative analysis of poll timing in different electoral contexts further highlights its impact. In countries with shorter campaign periods, such as the United Kingdom, polls taken two weeks before an election may be more informative than comparable polls in the U.S., where campaigns stretch over months. Similarly, in systems with compulsory voting, such as Australia, late swings driven by who decides to turn out may be less pronounced, because nearly all eligible voters cast a ballot and pollsters need not predict turnout. These variations suggest that the optimal timing for polls depends on the specific electoral environment, making a one-size-fits-all approach unreliable.

In conclusion, the timing of political polls is a critical yet often overlooked factor in their accuracy. By understanding how poll timing interacts with external events and electoral dynamics, consumers can better interpret results and avoid being misled by distorted snapshots of public sentiment. Whether you’re a journalist, campaigner, or voter, recognizing the temporal limitations of polls is essential for making informed decisions in an increasingly volatile political landscape.

Frequently asked questions

Political polls are not inherently skewed, but they can be influenced by factors like sampling methods, question wording, and response biases. Properly conducted polls aim for accuracy, but errors can occur if these factors are not carefully managed.

While reputable pollsters adhere to ethical standards, there is potential for intentional skewing through biased question framing, selective sampling, or manipulation of data. Transparency in methodology is key to identifying trustworthy polls.

Political polls do not inherently favor one party, but perceptions of bias can arise if certain demographics are over- or under-represented. Accurate polling requires diverse and representative samples to avoid such skews.

Non-response bias occurs when certain groups are less likely to participate in polls, skewing results. For example, if younger voters are less likely to respond, the poll may overrepresent older voters' opinions, leading to inaccurate predictions.
